GenAI with LLMs (6) LLM powered applications

This post covers LLM powered applications from the Generative AI With LLMs course offered by DeepLearning.AI.

Model optimizations for deployment

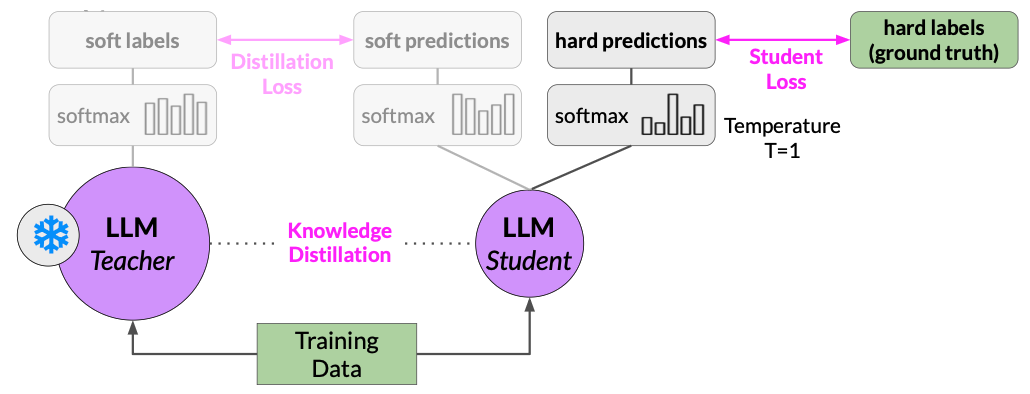

Distillation

Distillation uses a larger model (the teacher model) to train a smaller model (the student model). The smaller model is then used for inference, which lowers the storage and compute budget. The student model learns to statistically mimic the behavior of the teacher model, either just in the final prediction layer or in the model’s hidden layers as well.

Distillation workflow:

- Start with a fine-tuned LLM as the teacher model, and create a smaller LLM as the student model.

- Freeze the teacher model’s weights and use it to generate completions for the training data. Meanwhile, also generate completions for the training data using the student model.

- The knowledge distillation between teacher and student model is achieved by minimizing a loss function called the distillation loss. To calculate the loss, distillation compares the probability distribution over tokens produced by the student model’s softmax layer to that of the teacher model’s softmax layer.

- Add a temperature parameter to the softmax function. The teacher model is already fine-tuned on the training data, so its probability distribution likely matches the ground truth closely and shows little variation across tokens. With a temperature greater than one, the probability distribution becomes broader and less strongly peaked. This softer distribution provides you with a set of tokens that are similar to the ground truth tokens. The teacher model’s temperature-scaled outputs are called the soft labels, and the student model’s are called the soft predictions.

- In parallel, you train the student model to generate the correct predictions based on your ground truth training data. Here, you don’t vary the temperature setting and instead use the standard softmax function. Distillation refers to the student model’s outputs as the hard predictions and to the ground truth data as the hard labels. The loss between the hard predictions and hard labels is the student loss.

- The combined distillation and student losses are used to update the weights of the student model via backpropagation.
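The workflow above can be sketched in a few lines of plain Python. This is a minimal illustration for a single token position, not the course's implementation: the function names, the example logits, and the `alpha` weighting between the two losses are all assumptions made for the sketch.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; a temperature above 1 flattens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and a predicted one."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)

def combined_loss(teacher_logits, student_logits, true_index,
                  temperature=2.0, alpha=0.5):
    # Distillation loss: soft labels (teacher) vs. soft predictions (student),
    # both computed with the same temperature.
    soft_labels = softmax(teacher_logits, temperature)
    soft_predictions = softmax(student_logits, temperature)
    distillation_loss = cross_entropy(soft_labels, soft_predictions)

    # Student loss: hard predictions (standard softmax) vs. hard labels
    # (one-hot ground truth).
    hard_predictions = softmax(student_logits)
    hard_labels = [1.0 if i == true_index else 0.0
                   for i in range(len(student_logits))]
    student_loss = cross_entropy(hard_labels, hard_predictions)

    # alpha is an assumed mixing weight; the post only says the two are combined.
    return alpha * distillation_loss + (1 - alpha) * student_loss

teacher_logits = [4.0, 1.0, 0.0]   # made-up values for a 3-token vocabulary
student_logits = [3.0, 1.5, 0.2]
loss = combined_loss(teacher_logits, student_logits, true_index=0)
```

Comparing `softmax(teacher_logits)` with `softmax(teacher_logits, temperature=4.0)` shows the effect described above: the top probability drops and the remaining tokens receive more mass.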

The key benefit of distillation methods is that the smaller student model can be used for inference in deployment instead of the teacher model. In practice, distillation is not as effective for generative decoder models. It’s typically more effective for encoder-only models such as BERT, which have a lot of representation redundancy.

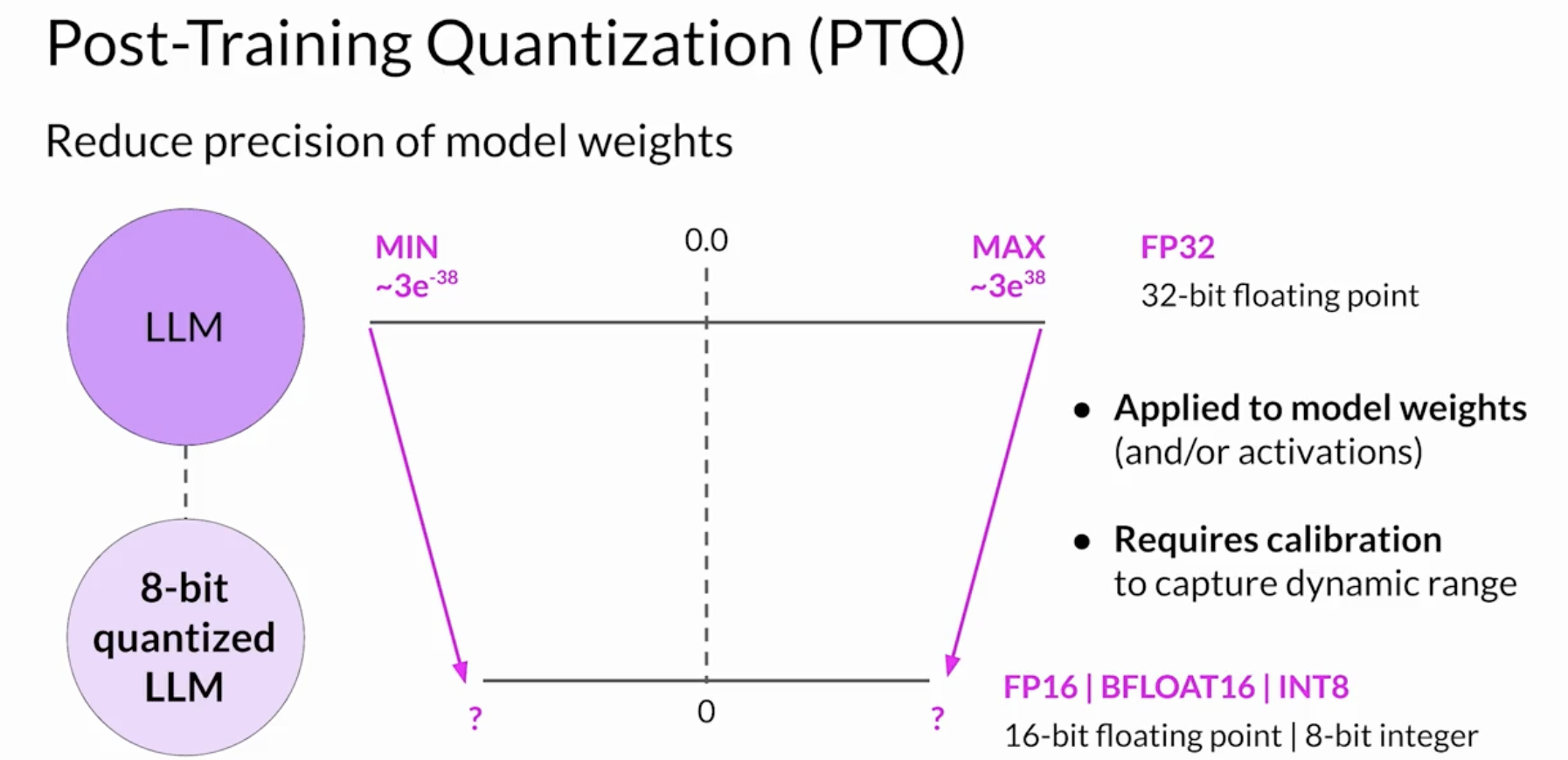

Quantization

We introduced the concept of quantization in the LLM pre-training note. After a model is trained, we can perform post-training quantization (PTQ) to optimize it for deployment. PTQ transforms a model’s weights to a lower-precision representation, such as 16-bit floating point or 8-bit integer.

- To reduce the model size and memory footprint, as well as the compute resources needed for model serving, quantization can be applied to just the model weights or to both weights and activation layers. In general, quantization approaches that include activations can have a higher impact on model performance.

- Quantization also requires an extra calibration step to statistically capture the dynamic range of the original parameter values.

- Trade-off: sometimes quantization results in a small percentage reduction in model evaluation metrics. That reduction can often be worth the cost saving and performance gains.
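To make the calibration step and the trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization of a handful of weights. The scheme (a single scale derived from the largest absolute value), the function names, and the weight values are assumptions chosen for illustration; production toolkits use more elaborate calibration.

```python
def calibrate_scale(values, num_bits=8):
    """Calibration: capture the dynamic range and map it onto the integer range."""
    max_abs = max(abs(v) for v in values)
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit integers
    return max_abs / qmax if max_abs > 0 else 1.0

def quantize(values, scale, num_bits=8):
    """Round each value to the nearest integer step and clamp to the int range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]

def dequantize(q_values, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in q_values]

weights = [0.42, -1.27, 0.003, 0.88, -0.51]   # made-up example weights
scale = calibrate_scale(weights)
q_weights = quantize(weights, scale)
restored = dequantize(q_weights, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The smallest weight here rounds to zero, which is the kind of precision loss that can show up as a small drop in evaluation metrics.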

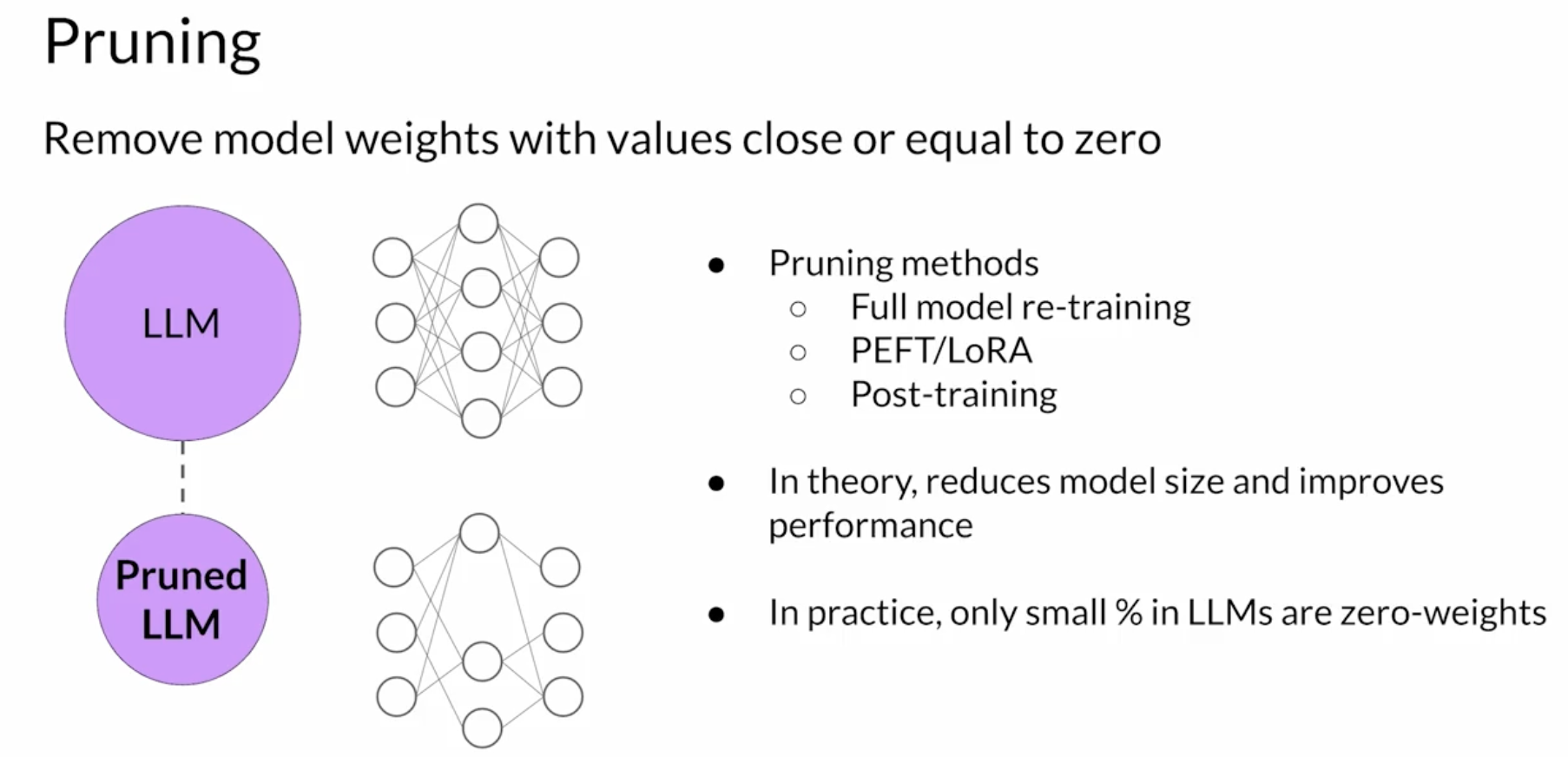

Pruning

Pruning reduces model size for inference by eliminating weights that do not contribute much to overall model performance. These are the weights with values very close to or equal to zero.

- Some pruning methods require full retraining of the model, while others fall into the category of parameter efficient fine tuning, such as LoRA.

- There are also methods that focus on post-training pruning.

In theory, pruning reduces model size and improves performance. In practice, however, there may not be much impact on the size and performance if only a small percentage of the model weights are close to zero.
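A simple way to picture this is magnitude pruning, sketched below under stated assumptions: the threshold, the function names, and the weights are hypothetical, and real pruning methods operate on tensors and are often followed by retraining.

```python
def magnitude_prune(weights, threshold=1e-2):
    """Zero out weights whose absolute value falls below the threshold."""
    return [0.0 if abs(w) < threshold else w for w in weights]

def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)

weights = [0.75, -0.004, 0.0, 0.31, 0.009, -0.62]   # made-up example weights
pruned = magnitude_prune(weights)

before = sparsity(weights)   # share of zeros before pruning
after = sparsity(pruned)     # share of zeros after pruning
```

The gain depends entirely on how many weights sit near zero: if only a small fraction fall under the threshold, `after` barely differs from `before`, which matches the caveat above.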

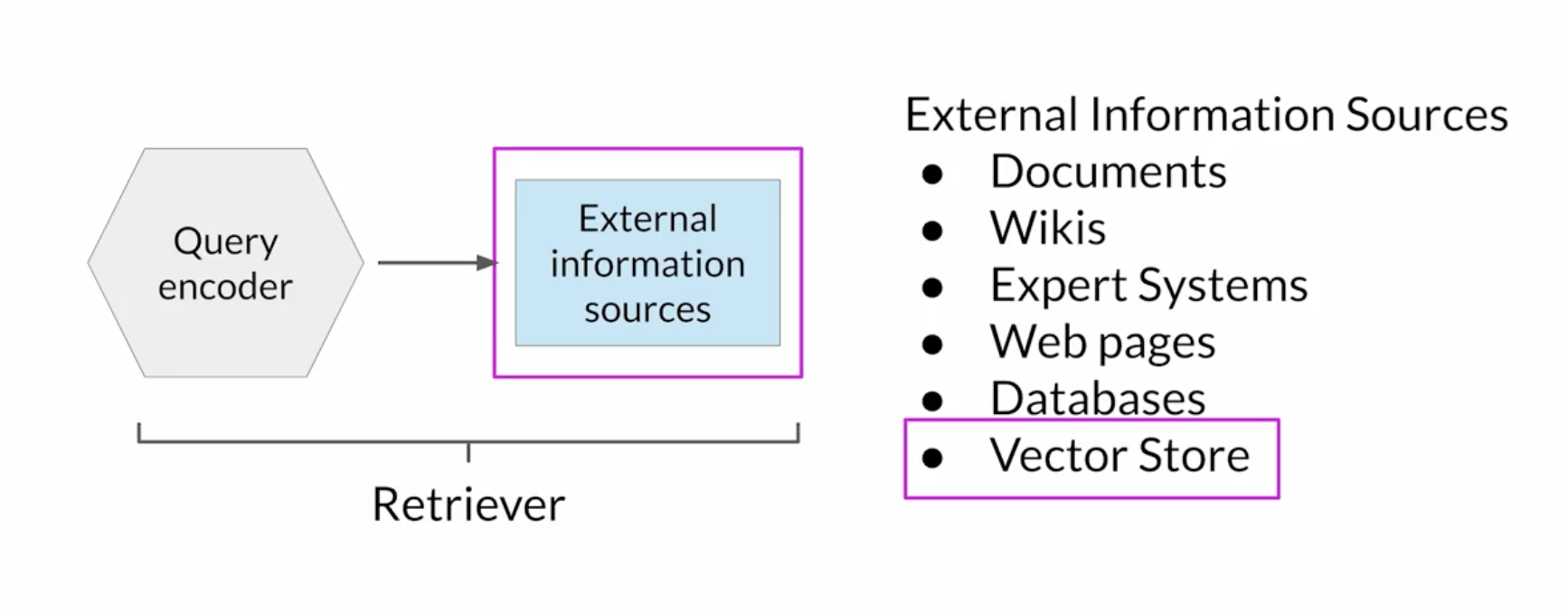

Retrieval augmented generation (RAG)

RAG helps LLMs update their understanding of the world and overcomes the knowledge cutoff issue. While we could retrain the model on new data, this would quickly become expensive and would require repeated retraining to regularly update the model with new knowledge. A more flexible and less expensive way to overcome the knowledge cutoff is to give the model access to additional external data at inference time.

RAG isn’t a specific set of technologies, but rather a framework for providing LLMs access to data they did not see during training. A number of different implementations exist, and the one you choose will depend on the details of your task, and the format of data to work with.

RAG can be used to integrate multiple types of external information sources. In the following, we briefly introduce using a vector store for RAG. A vector store contains vector representations of text and enables fast, efficient relevance search based on similarity.

Data preparation for vector store

Two considerations:

- Data must fit inside the context window. The external data sources can be split into many chunks, each of which fits in the context window. Packages like LangChain can handle this.

- Data must be in a format that allows its relevance to be assessed at inference time: embedding vectors. RAG methods take the small chunks of external data and process them through embedding models to create an embedding vector for each. These embedding vectors can be stored in structures called vector stores, which allow for fast searching of datasets and efficient identification of semantically related text.

Vector databases are a particular implementation of a vector store, where each vector is also identified by a key. This allows the text generated by RAG to include a citation for the document from which it was retrieved.
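The data preparation and lookup steps can be sketched as follows. This is a toy illustration only: the word-count `embed` function stands in for a real embedding model, the in-memory dictionary stands in for a vector database, and the document names and texts are made up.

```python
import math
import re
from collections import Counter

def chunk_text(text, max_words=8):
    """Split text into chunks small enough to fit a (hypothetical) context budget."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def build_store(documents):
    """Key each chunk's vector by document name and chunk number."""
    store = {}
    for doc_id, text in documents.items():
        for n, chunk in enumerate(chunk_text(text)):
            store[f"{doc_id}#{n}"] = (embed(chunk), chunk)
    return store

def retrieve(store, query, k=1):
    """Return the k chunks most similar to the query, with their keys."""
    q = embed(query)
    ranked = sorted(store.items(), key=lambda item: cosine(q, item[1][0]), reverse=True)
    return [(key, chunk) for key, (_, chunk) in ranked[:k]]

documents = {
    "policy.txt": "Refunds are issued within 30 days of purchase.",
    "shipping.txt": "Orders ship within two business days.",
}
store = build_store(documents)
question = "How many days for refunds"
key, chunk = retrieve(store, question)[0]

# The key travels with the chunk, so the completion can cite its source.
prompt = f"Context [{key}]: {chunk}\n\nQuestion: {question}"
```

Because every vector is stored under a key, the retrieved chunk carries its source along into the prompt, which is what makes citations possible.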

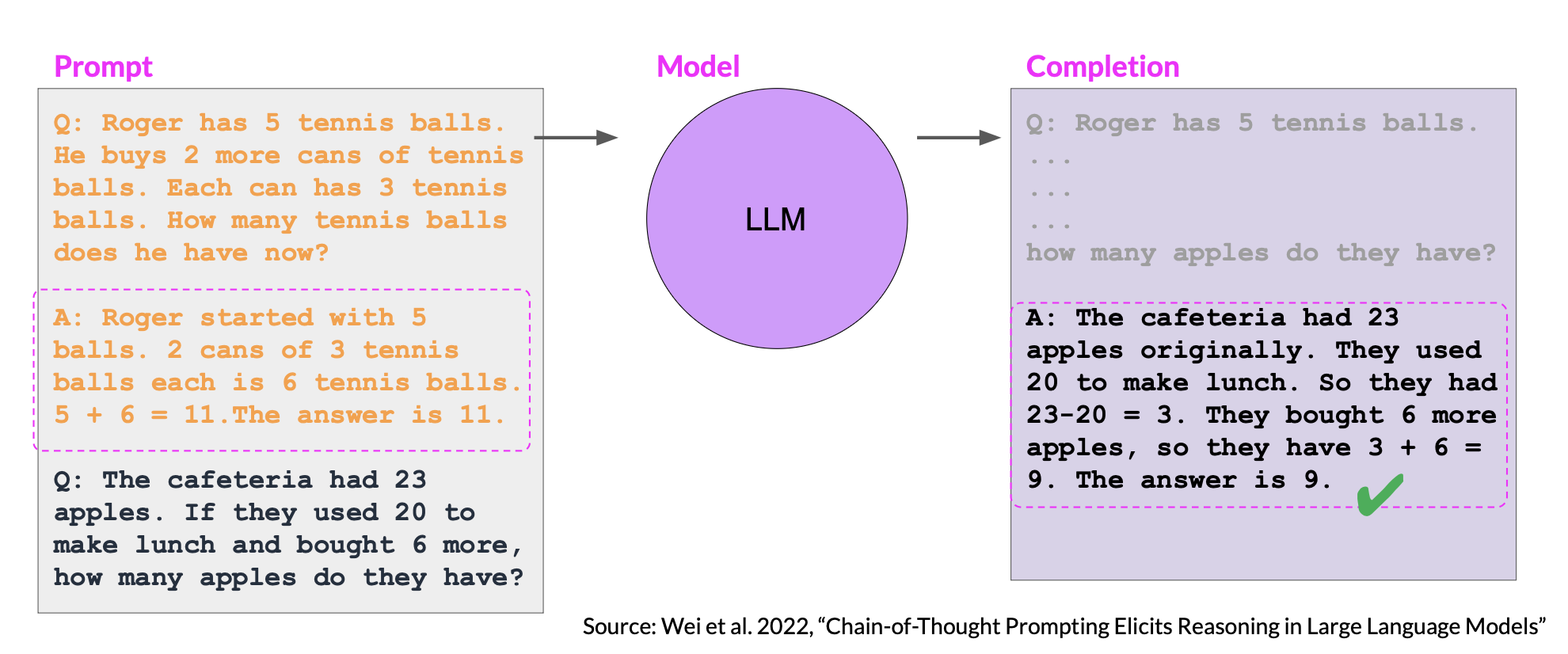

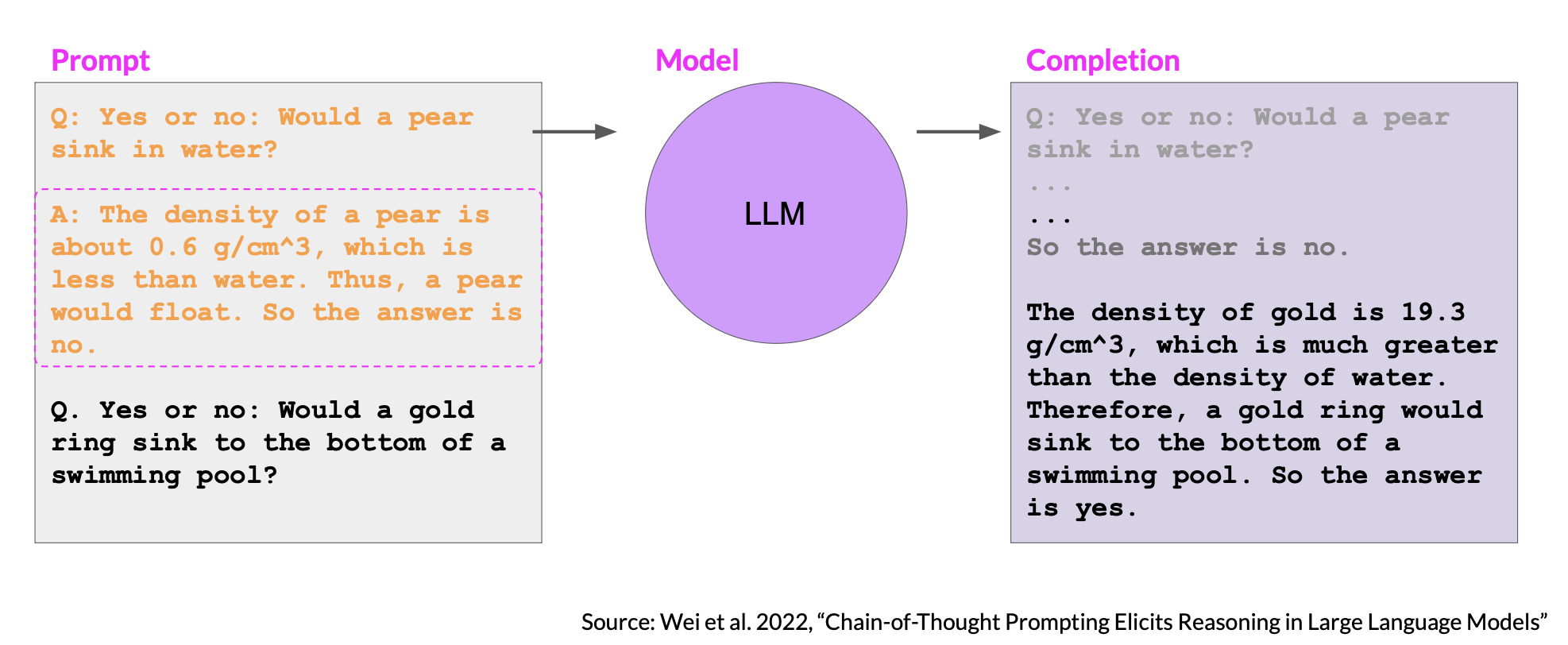

Chain-of-thought prompting

Chain-of-thought prompting works by including a series of intermediate reasoning steps into any examples that you use for one or few-shot inference. By structuring the examples in this way, you’re essentially teaching the model how to reason through the task to reach a solution.
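As a small illustration, the helper below assembles a one-shot chain-of-thought prompt. The helper name and prompt layout are assumptions for this sketch; the worked example follows the well-known tennis ball problem from the chain-of-thought paper.

```python
def build_cot_prompt(examples, question):
    """Format worked examples with their reasoning, then append the new question."""
    parts = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in examples
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

examples = [(
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?",
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11.",
    11,
)]

prompt = build_cot_prompt(
    examples,
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?",
)
```

The prompt ends with an open `A:` so that the model continues in the same style, writing out its intermediate steps before the final answer.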

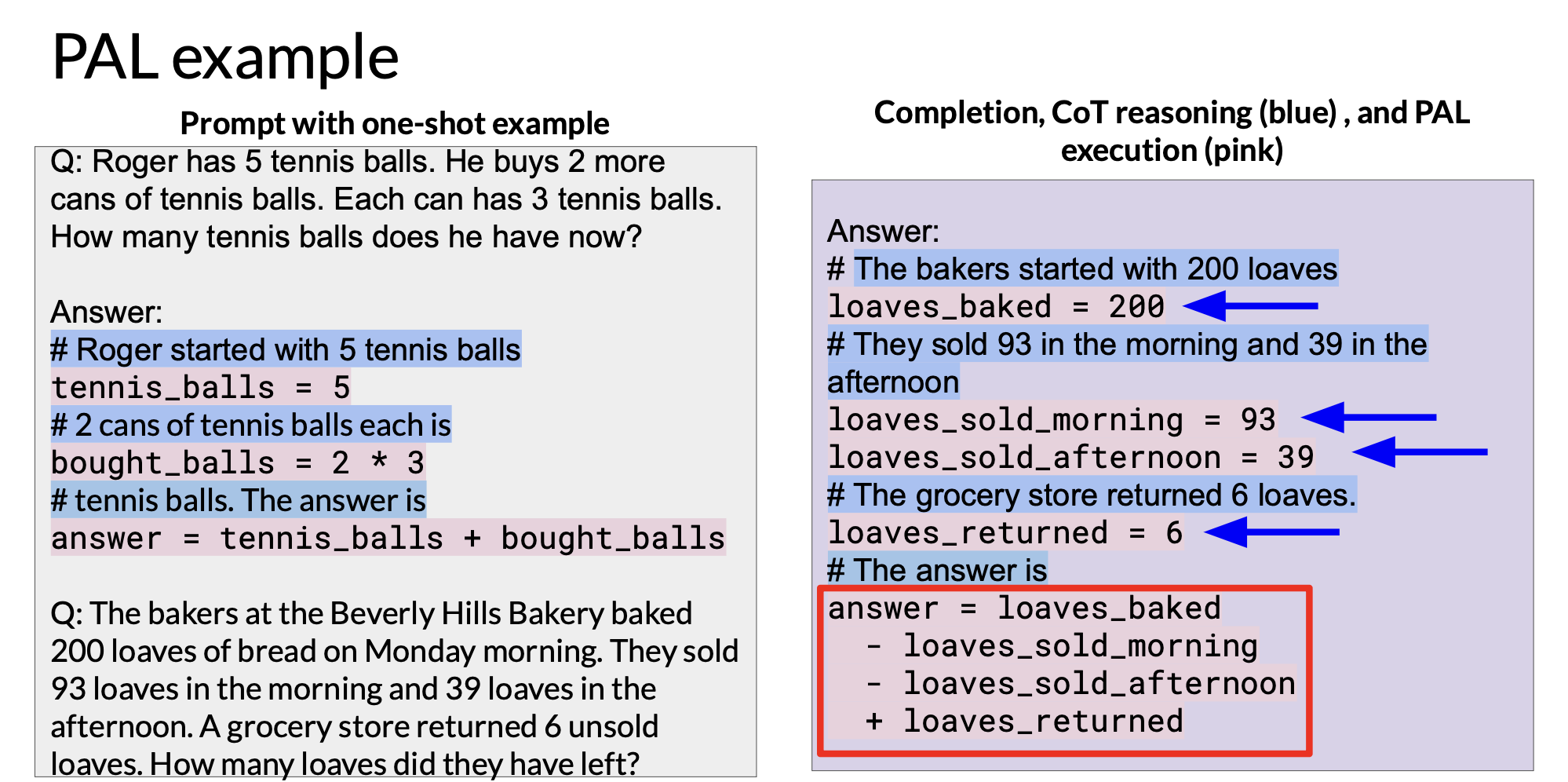

Program-aided language models (PAL)

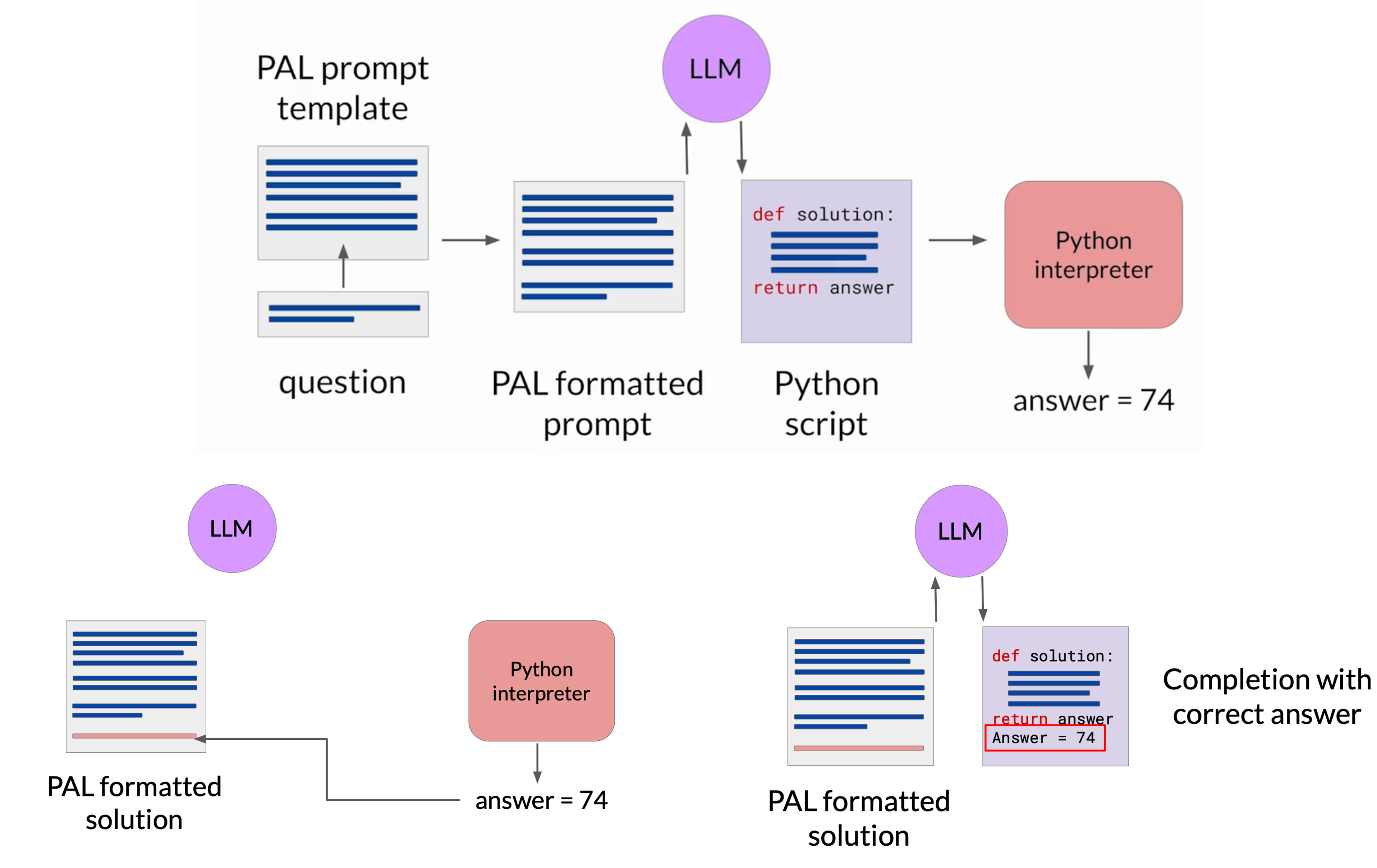

PAL pairs an LLM with an external code interpreter to carry out calculations. The strategy behind PAL is to have the LLM generate completions where reasoning steps are accompanied by computer code. This code is then passed to an interpreter to carry out the calculations necessary to solve the problem. You specify the output format for the model by including examples for one-shot or few-shot inference in the prompt.

To run inference with PAL:

- Format the prompt to contain one or more examples; each example should contain a question followed by reasoning steps in lines of Python code that solve the problem

- Append the new question to the prompt template; the resulting PAL-formatted prompt now contains both the example and the problem to solve

- Pass the combined prompt to the LLM, which generates a completion in the form of a Python script, having learned how to format the output from the example in the prompt

- Hand off the script to a Python interpreter, which runs the code and generates an answer

- Append the text containing the answer to the PAL-formatted prompt, forming a prompt that includes the correct answer in the context

- Pass the updated prompt to the LLM, which generates a completion that contains the correct answer
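The steps above can be sketched end to end with a stand-in for the model. Everything here is an assumption for illustration: `fake_llm` is a hypothetical placeholder that returns a fixed script instead of calling a real LLM, the prompt layout is one possible format, and running untrusted model output with `exec` would need sandboxing in a real application.

```python
PAL_EXAMPLE = """Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

# solution in Python:
# Roger started with 5 tennis balls
tennis_balls = 5
# 2 cans of 3 tennis balls each
bought_balls = 2 * 3
# the answer is the total
answer = tennis_balls + bought_balls
"""

def build_pal_prompt(question):
    """Steps 1-2: one worked example followed by the new question."""
    return f"{PAL_EXAMPLE}\nQ: {question}\n\n# solution in Python:\n"

def fake_llm(prompt):
    """Step 3 (hypothetical stand-in): a real LLM would generate this script."""
    return (
        "loaves_baked = 200\n"
        "loaves_sold_morning = 93\n"
        "loaves_sold_afternoon = 39\n"
        "loaves_returned = 6\n"
        "answer = loaves_baked - loaves_sold_morning"
        " - loaves_sold_afternoon + loaves_returned\n"
    )

def run_script(script):
    """Step 4: execute the generated code and read back the `answer` variable."""
    namespace = {}
    exec(script, namespace)  # unsafe for untrusted code; sandbox in practice
    return namespace["answer"]

question = (
    "The bakers baked 200 loaves of bread. They sold 93 in the morning and 39 "
    "in the afternoon. A grocery store returned 6 unsold loaves. "
    "How many loaves do they have left?"
)
pal_prompt = build_pal_prompt(question)
script = fake_llm(pal_prompt)
answer = run_script(script)

# Step 5: append the computed answer so the final LLM call sees it in context.
final_prompt = f"{pal_prompt}{script}\nThe answer is {answer}.\n"
```

The arithmetic happens in the interpreter rather than in the model, so the answer placed in `final_prompt` does not depend on the LLM's ability to calculate.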

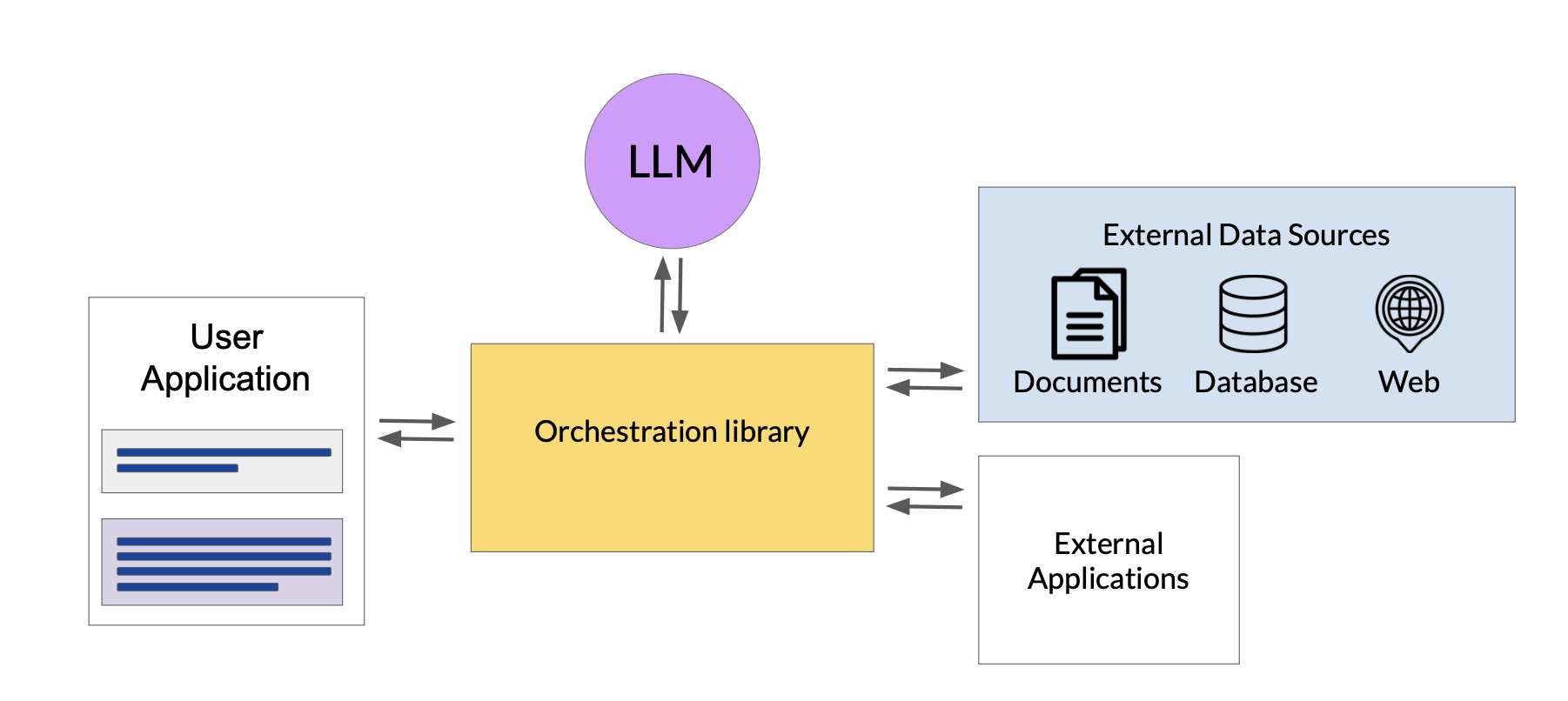

How to automate the process? The orchestrator is a technical component that manages the flow of information and the initiation of calls to external data sources or applications. It can also decide what actions to take based on the information contained in the output of the LLM. The LLM doesn’t have to decide to run the code; it just has to write the script, which the orchestrator then passes to the external interpreter to run.

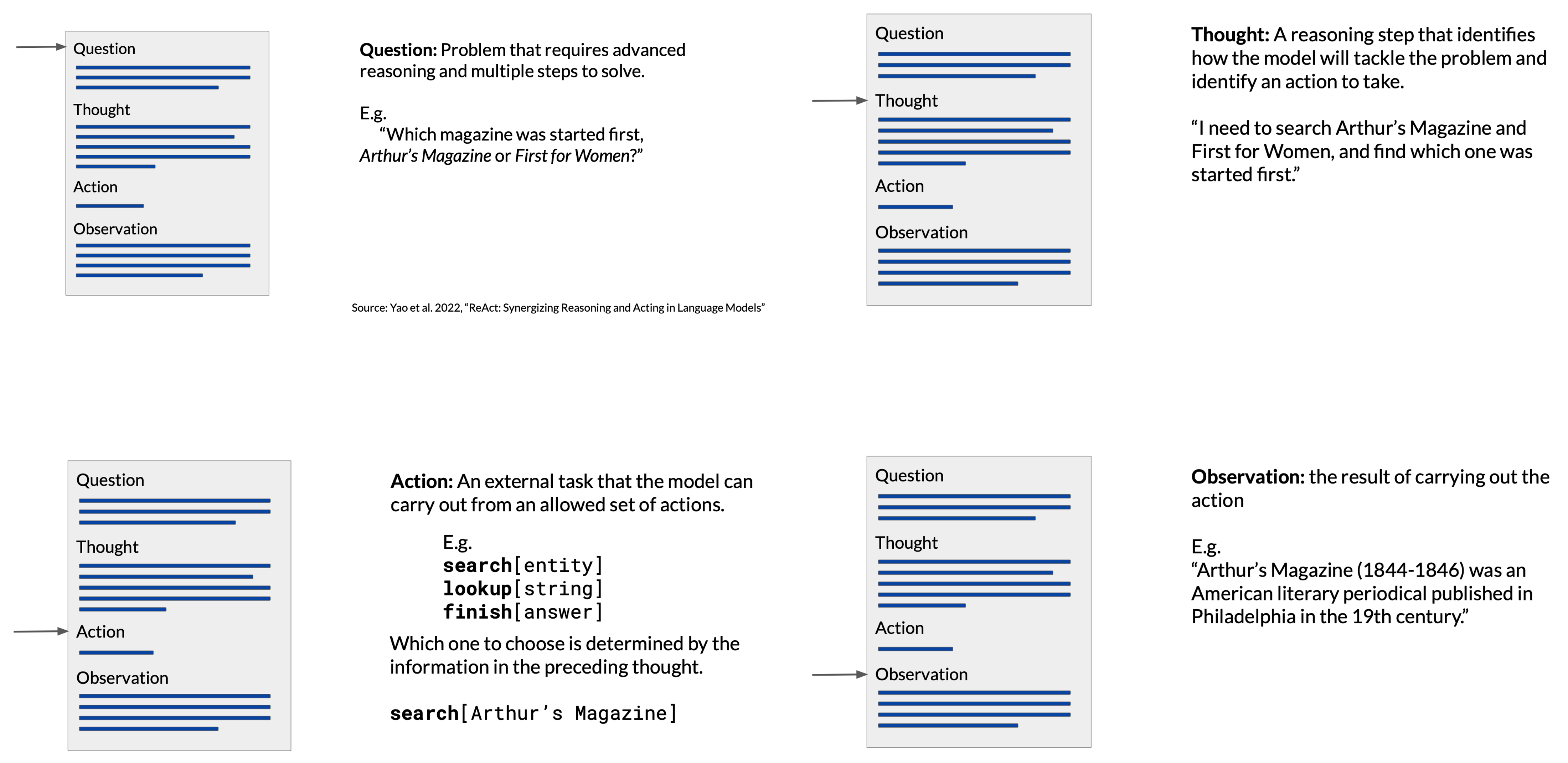

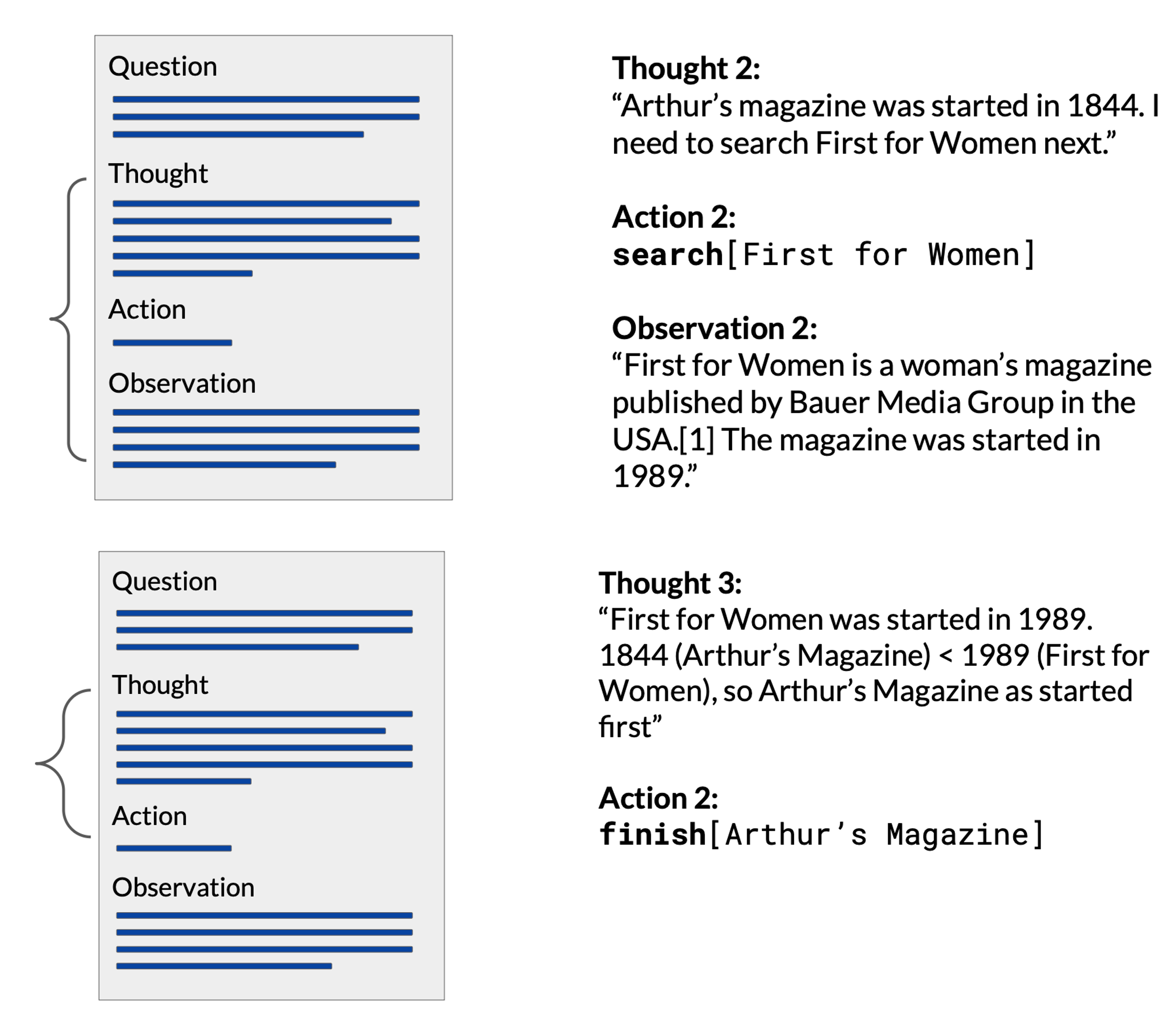

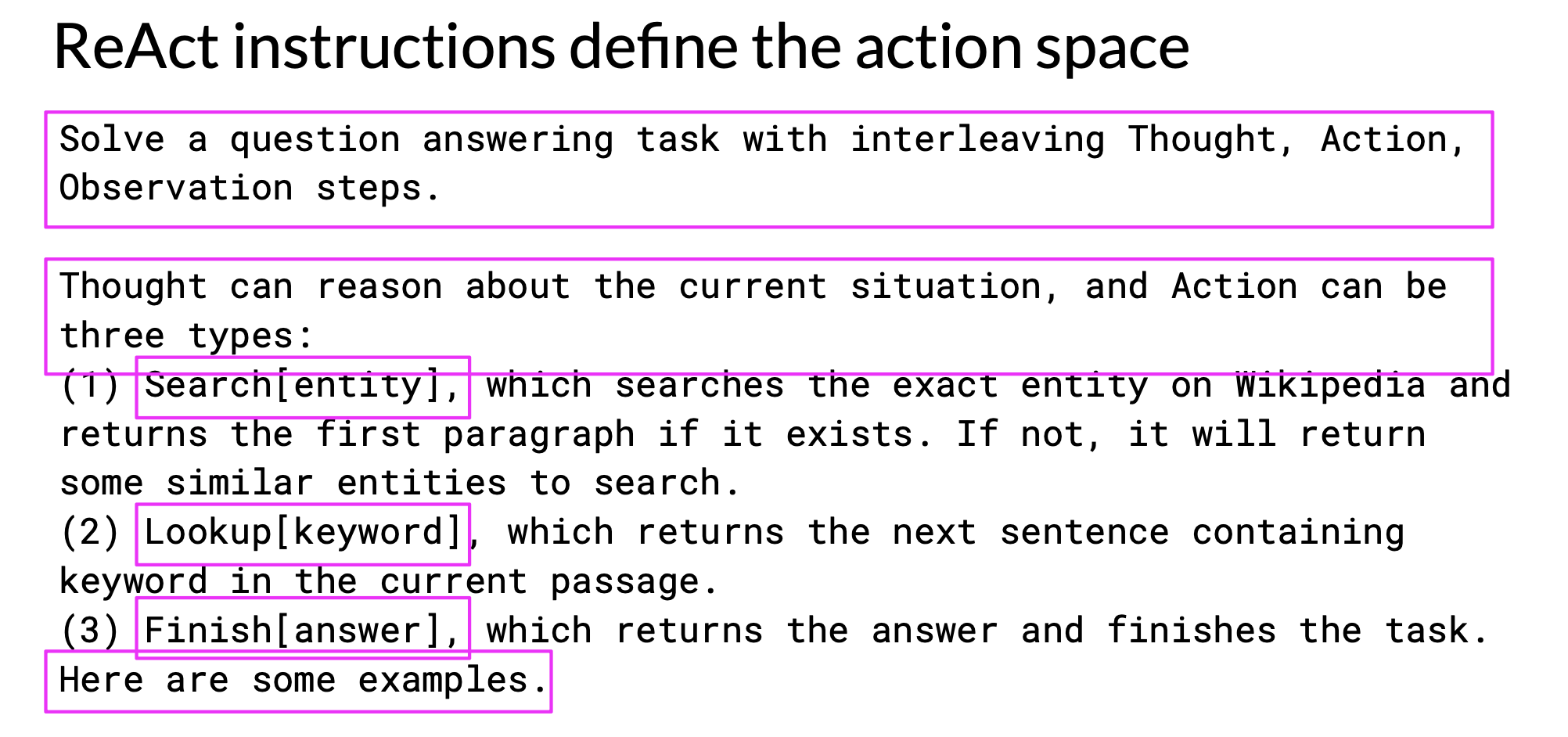

ReAct: Combining reasoning and action

ReAct is a prompting strategy that combines chain-of-thought reasoning with action planning. It uses structured examples to show an LLM how to reason through a problem and decide on actions to take that move it closer to a solution.
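A rough sketch of the loop that such a prompt drives is shown below. It is not the paper's or LangChain's implementation: `scripted_llm` is a hypothetical stand-in that returns canned thought and action text, the `lookup` tool and its data are made up, and the `Action: name[input]` format is an assumed convention for this example.

```python
import re

# Hypothetical tool registry; a real application would call search APIs, databases, etc.
TOOLS = {
    "lookup": lambda query: {"capital of France": "Paris"}.get(query, "no result"),
}

ACTION_PATTERN = re.compile(r"Action: (\w+)\[(.*)\]")

def scripted_llm(transcript):
    """Hypothetical stand-in for an LLM: returns the next thought and action."""
    if "Observation:" not in transcript:
        return "Thought: I need to look up the capital.\nAction: lookup[capital of France]"
    return "Thought: I now know the answer.\nAction: finish[Paris]"

def react_loop(question, llm, tools, max_steps=5):
    """Alternate between model reasoning/actions and tool observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        match = ACTION_PATTERN.search(step)
        if match is None:
            break  # the model produced no action we can parse
        name, argument = match.groups()
        if name == "finish":
            return argument, transcript
        tool = tools.get(name)
        observation = tool(argument) if tool else f"unknown tool {name}"
        # Feed the result back so the next reasoning step can use it.
        transcript += f"Observation: {observation}\n"
    return None, transcript

answer, transcript = react_loop("What is the capital of France?", scripted_llm, TOOLS)
```

Each observation is appended to the transcript before the next model call, which is how the reasoning steps stay grounded in what the tools actually returned.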

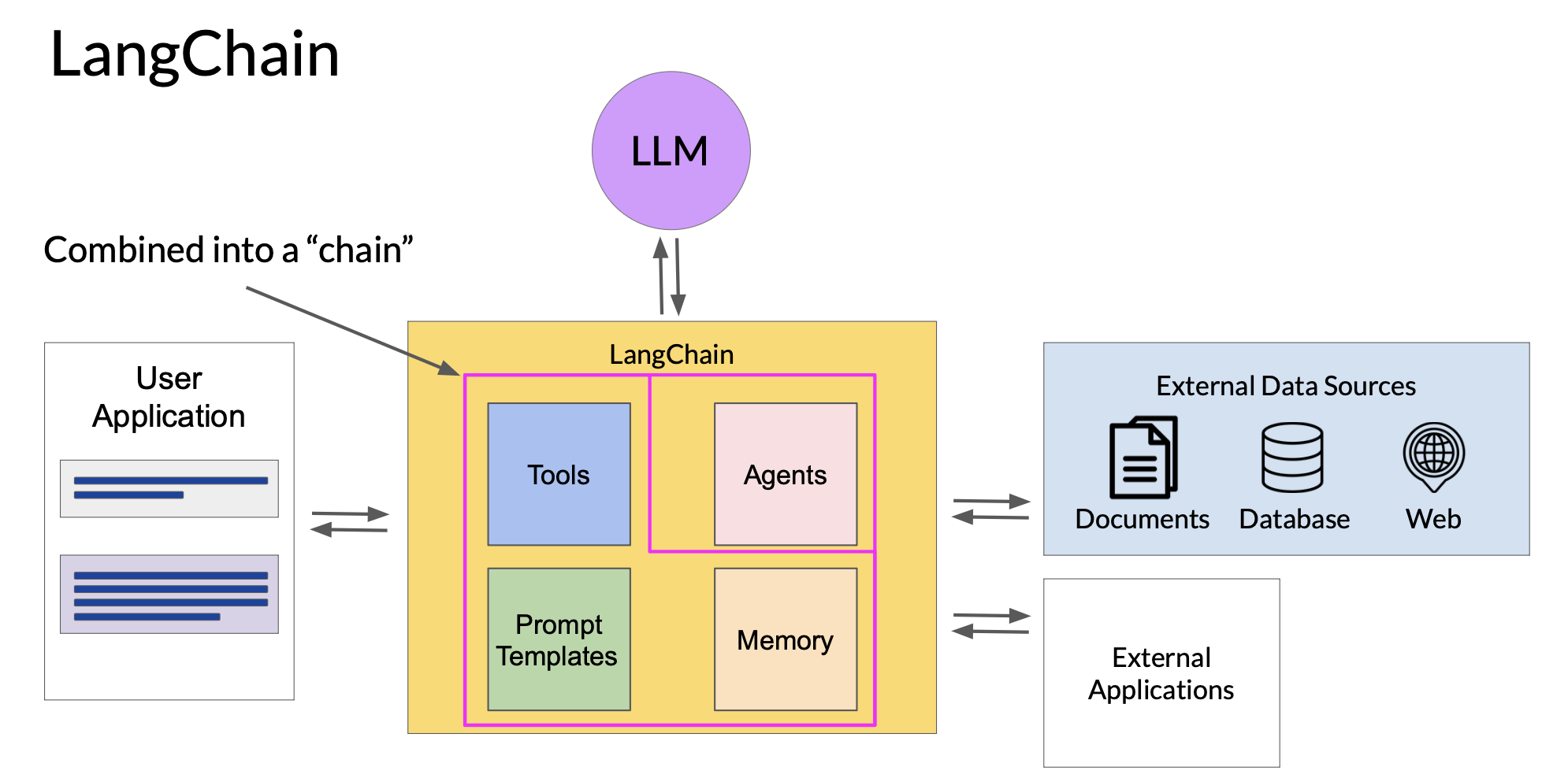

The LangChain framework provides modular pieces that contain the components necessary to work with LLMs. These components include prompt templates for many different use cases that you can use to format both input examples and model completions, and memory that we can use to store interactions with an LLM. The framework also includes pre-built tools that carry out a variety of tasks, including calls to external datasets and various APIs. Connecting a selection of these individual components together results in a chain.

The creators of LangChain have developed a set of predefined chains that have been optimized for different use cases. You can use these off the shelf to quickly get your app up and running. Sometimes your application workflow could take multiple paths depending on the information the user provides. In this case, you can’t use a predetermined chain; instead, you need the flexibility to decide which actions to take as the user moves through the workflow. You can use an agent to interpret the input from the user and determine which tool or tools to use to complete the task. LangChain currently includes agents for both PAL and ReAct, among others. Agents can be incorporated into chains to take an action or to plan and execute a series of actions.

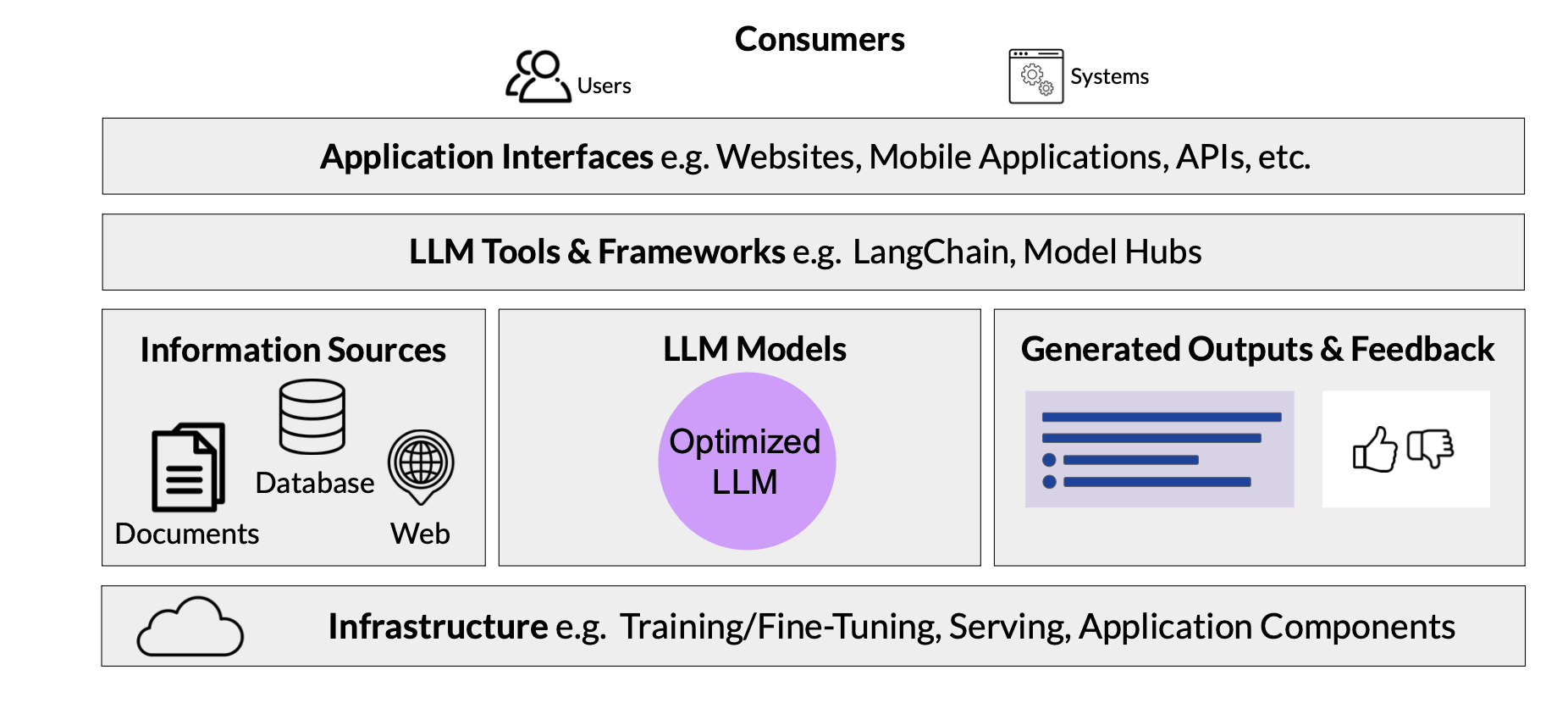

LLM application architectures

References

- Chain-of-thought Prompting Elicits Reasoning in Large Language Models

- Paper by researchers at Google exploring how chain-of-thought prompting improves the ability of LLMs to perform complex reasoning.

- PAL: Program-aided Language Models

- This paper proposes an approach that uses the LLM to read natural language problems and generate programs as the intermediate reasoning steps.

- ReAct: Synergizing Reasoning and Acting in Language Models

- This paper presents an advanced prompting technique that allows an LLM to make decisions about how to interact with external applications.

- LangChain Library (GitHub)

- This library is aimed at assisting in the development of LLM-powered applications, such as question answering, chatbots, and other agents.

- Who Owns the Generative AI Platform?

- The article examines the market dynamics and business models of generative AI.