GenAI with LLMs (3) Instruction fine-tuning

This post covers instruction fine-tuning from the Generative AI With LLMs course offered by DeepLearning.AI.

LLM fine-tuning at a high level

Why we need LLM fine-tuning

Recall In-context learning: zero-shot, one-shot, and few-shot inference. See this note for more details.

We need LLM fine-tuning because in-context learning has several drawbacks:

- For smaller models, in-context learning doesn’t always work, even when five or six examples are included.

- Any examples you include in your prompt take up valuable space in the context window, reducing the amount of room you have to include other useful information.
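To make the second drawback concrete, here is a minimal sketch that builds zero-shot to few-shot prompts and reports how their length grows. The example reviews and the `build_prompt` helper are made up for illustration, and the whitespace word count is only a crude stand-in for a real tokenizer.

```python
def build_prompt(examples, query):
    """Concatenate labeled examples and the final query into one prompt."""
    parts = [f"Review: {text}\nSentiment: {label}\n" for text, label in examples]
    parts.append(f"Review: {query}\nSentiment:")
    return "\n".join(parts)


# Hypothetical in-context examples, invented for this sketch.
examples = [
    ("I loved this movie.", "Positive"),
    ("The plot was dull and slow.", "Negative"),
    ("Great acting and a clever script.", "Positive"),
]
query = "The soundtrack was wonderful."

for k in range(len(examples) + 1):
    prompt = build_prompt(examples[:k], query)
    # Word count is a rough proxy; a real tokenizer would give different numbers.
    print(f"{k}-shot prompt: {len(prompt.split())} words")
```

Every added example lengthens the prompt, and that space is no longer available for other context.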

What is LLM fine-tuning

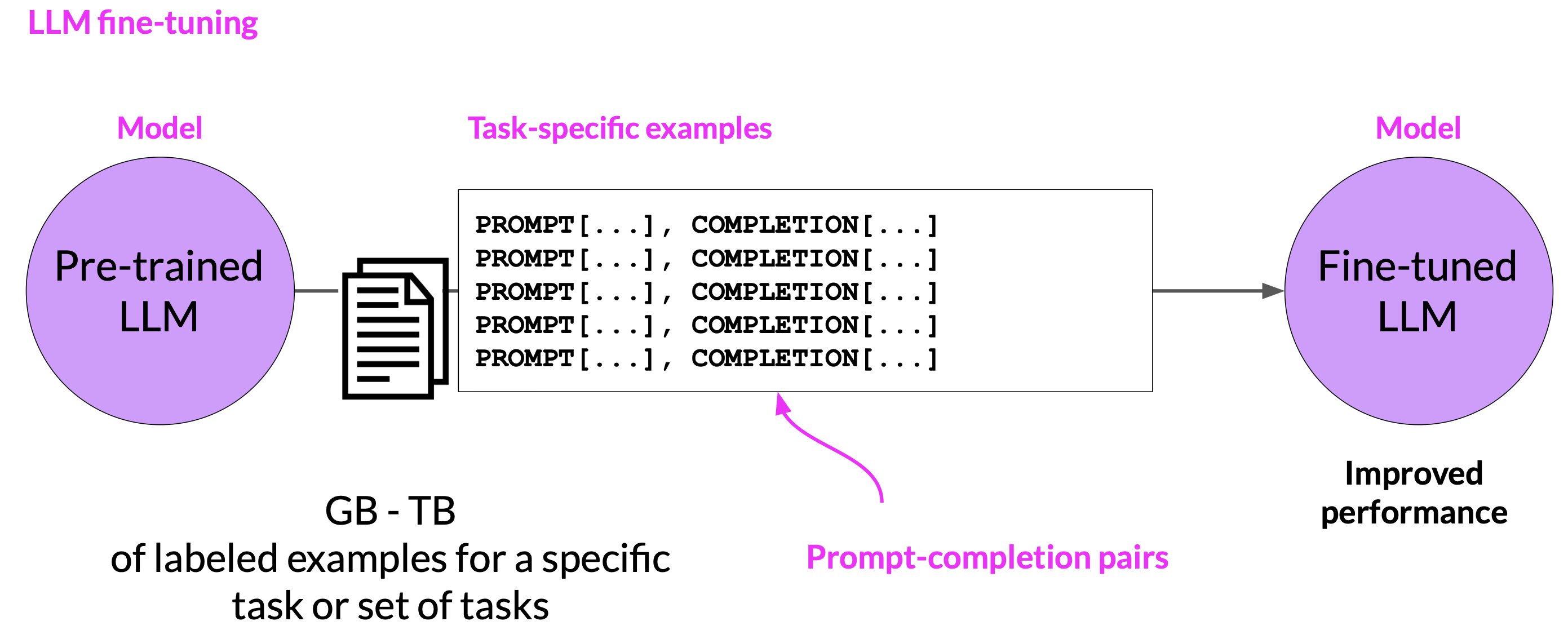

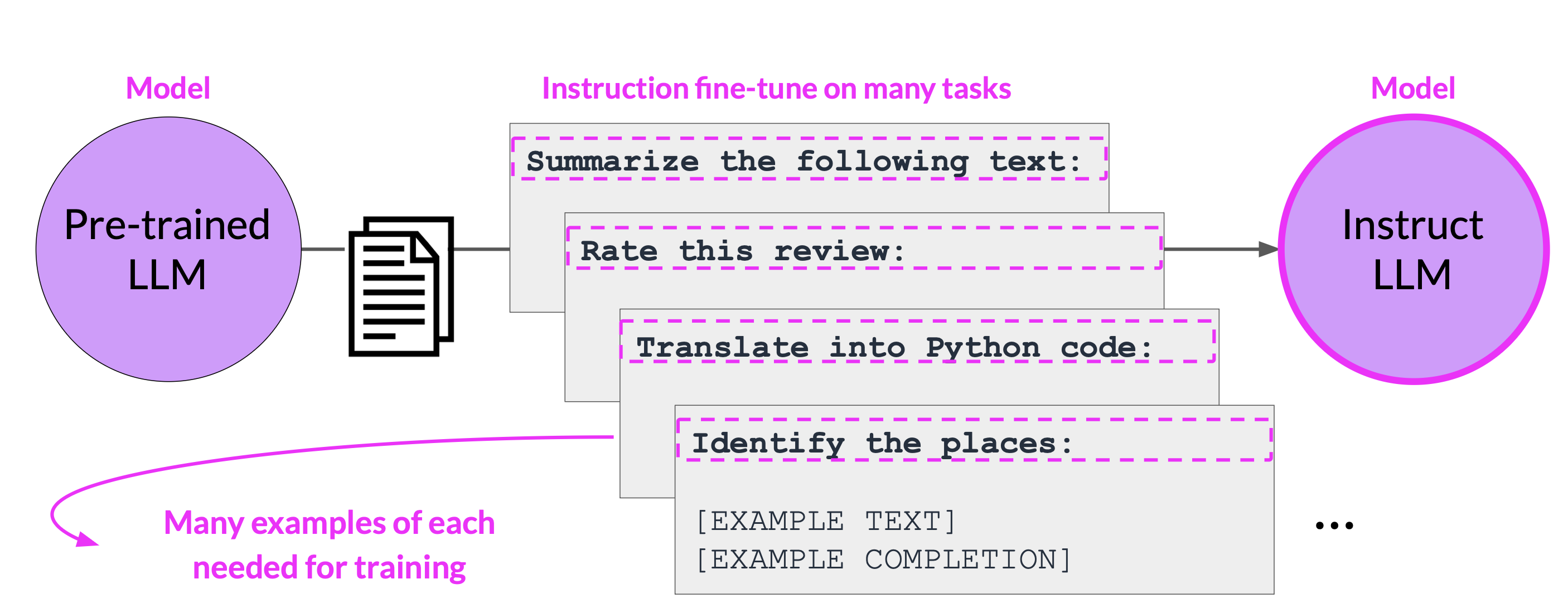

Fine-tuning is a supervised learning process, where you use a dataset of labeled examples to update the weights of the LLM.

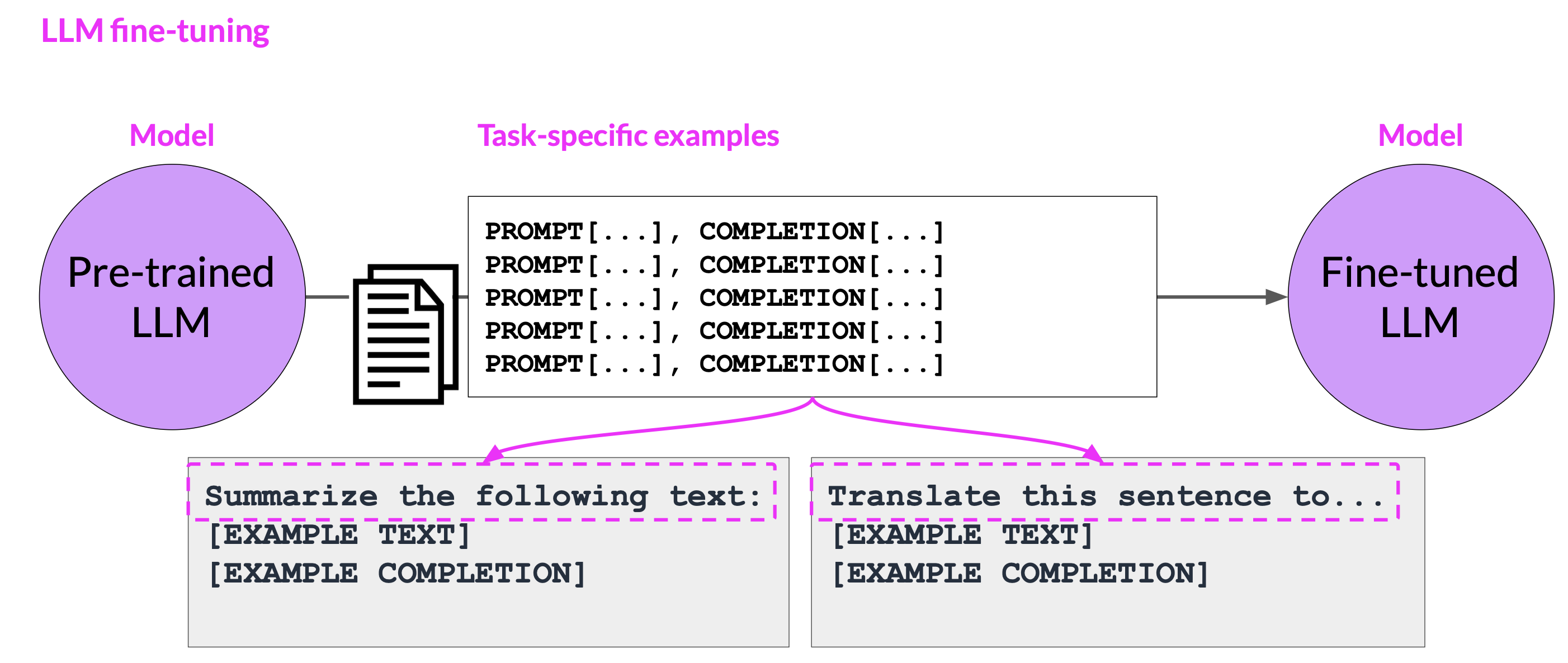

Instruction fine-tuning trains the model using examples demonstrating how it should respond to a specific instruction. The labeled examples are prompt-completion pairs; the fine-tuning process extends the training of the model to improve its ability to generate good completions for a specific task.

Each example in a prompt-completion dataset begins with an instruction. For example, if you want to fine-tune your model to improve its summarization ability, you’d build up a dataset of examples that begin with the instruction “summarize the following text” or a similar phrase; if you are improving the model’s translation skills, your examples would include instructions like “translate this sentence”. These examples allow the model to learn to generate responses that follow the given instructions.

Instruction fine-tuning in which all of the model’s weights are updated is known as full fine-tuning. The process results in a new version of the model with updated weights. Note that, just like pre-training, full fine-tuning requires enough memory and compute budget to store and process all the gradients, optimizer states, and other components that are updated during training.
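As a rough back-of-envelope illustration of that memory requirement, the sketch below adds up weights, gradients, and optimizer states. The numbers are assumptions, not figures from this post: 32-bit floats (4 bytes per value) and an Adam-style optimizer that keeps two extra values per parameter. Activations and temporary buffers are ignored, so real usage is higher.

```python
def full_finetune_memory_gb(num_params, bytes_per_value=4, optimizer_states_per_param=2):
    """Rough lower bound on training memory in GB (1 GB = 1e9 bytes)."""
    weights = num_params * bytes_per_value
    gradients = num_params * bytes_per_value  # one gradient per weight
    optimizer = num_params * bytes_per_value * optimizer_states_per_param
    return (weights + gradients + optimizer) / 1e9


# Hypothetical 1-billion-parameter model.
print(full_finetune_memory_gb(1_000_000_000))  # 16.0
```

Under these assumptions, training needs roughly four times the memory required just to hold the weights.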

LLM fine-tuning process

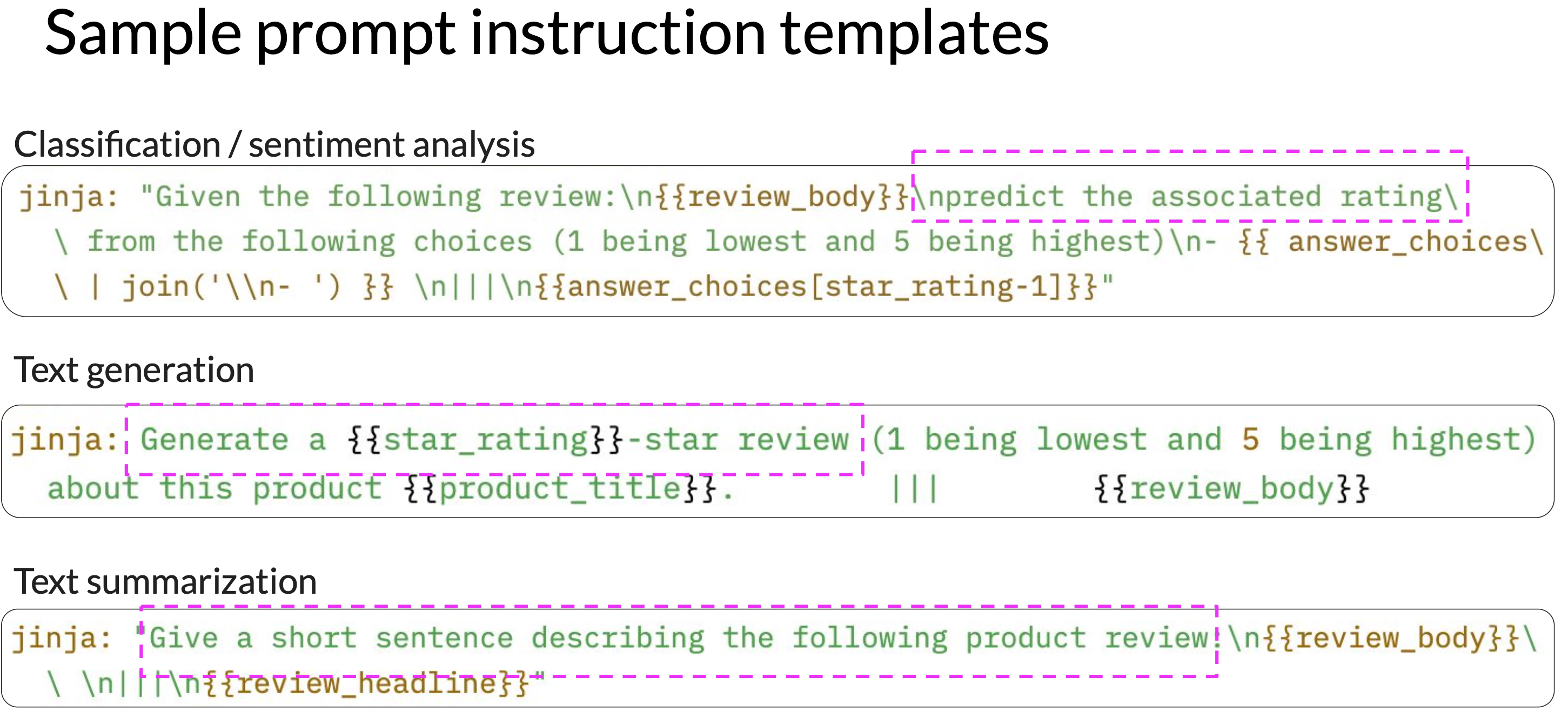

The first step of instruction fine-tuning is to prepare your training data. Many publicly available datasets were used to train earlier generations of LLMs, but not all of them are formatted as instructions. Developers have assembled prompt template libraries that can take an existing dataset, for example the large dataset of Amazon product reviews, and turn it into an instruction prompt dataset for fine-tuning (see Figure 3, source). In each case, you pass the original review, here called review_body, to the template, where it gets inserted into text that starts with an instruction. The result is a prompt that contains both an instruction and the example from the dataset.
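The template step can be sketched in a few lines of Python. The template wording, the `star_rating` field, and the sample record below are assumptions made up for illustration; only the `review_body` placeholder comes from the description above.

```python
# Hypothetical template, loosely in the style of a prompt template library.
TEMPLATE = (
    "Predict the associated rating from the following review on a scale of 1-5:\n"
    "{review_body}\n"
    "Rating:"
)


def to_instruction_example(record):
    """Turn one raw review record into a prompt-completion pair."""
    prompt = TEMPLATE.format(review_body=record["review_body"])
    completion = str(record["star_rating"])  # field name is an assumption
    return {"prompt": prompt, "completion": completion}


# Made-up record standing in for one row of a review dataset.
record = {"review_body": "Battery died after two days.", "star_rating": 1}
example = to_instruction_example(record)
print(example["prompt"])
print(example["completion"])
```

Applying a function like this to every row yields the prompt-completion pairs used for fine-tuning.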

Once the instruction dataset is ready, we divide it into training, validation, and test splits.
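A minimal sketch of such a split is shown below. The 80/10/10 proportions, the fixed seed, and the placeholder examples are assumptions chosen for illustration.

```python
import random


def split_dataset(examples, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle once, then cut into train / validation / test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    n_test = int(len(shuffled) * test_fraction)
    n_train = len(shuffled) - n_val - n_test
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Placeholder prompt-completion pairs.
examples = [{"prompt": f"prompt {i}", "completion": f"completion {i}"} for i in range(100)]
train, val, test = split_dataset(examples)
print(len(train), len(val), len(test))  # 80 10 10
```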

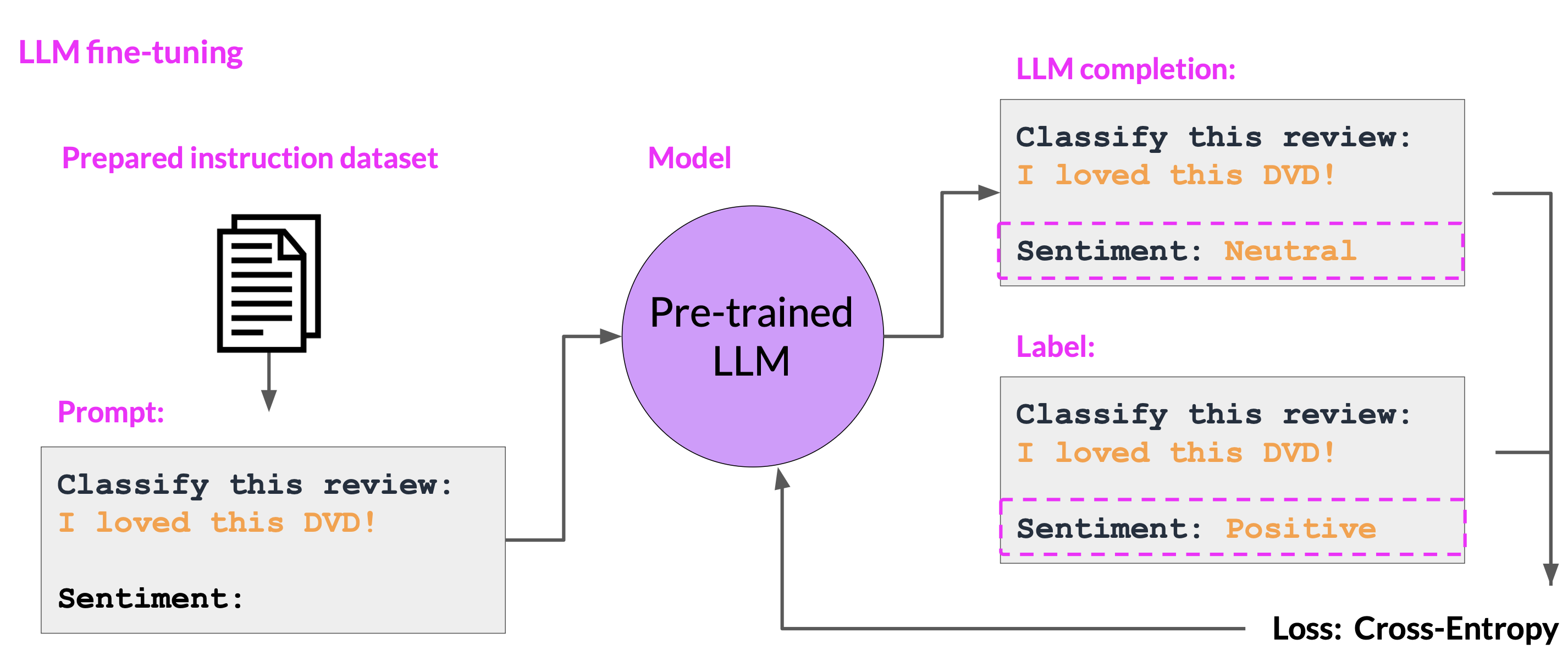

During fine-tuning, we select prompts from the training set and pass them to the LLM, which then generates completions. Next, we compare the LLM’s completion with the response specified in the training data.

Recall that the output of an LLM is a probability distribution across tokens. So we can compare the distribution of the completion with that of the training label, and use the standard cross-entropy function to calculate the loss between the two token distributions. The calculated loss is then used to update the model weights through standard backpropagation. Repeating this for many batches of prompt-completion pairs over several epochs updates the weights so that the model’s performance on the task improves. Finally, compute validation_accuracy on the holdout validation set, and test_accuracy once you apply the model to the test set.
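The loss calculation can be sketched in plain Python. Because the training label is a one-hot distribution over the vocabulary, the cross-entropy at each position reduces to the negative log-probability that the model assigns to the reference token. The toy logits and the four-token vocabulary are made up; a real training loop would rely on a deep learning framework for both the loss and the backpropagation step.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits_per_position, target_ids):
    """Mean negative log-probability of the reference tokens."""
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)


# Toy example: vocabulary of 4 tokens, completion of 3 tokens.
logits = [[2.0, 0.5, 0.1, 0.1], [0.2, 3.0, 0.1, 0.3], [0.1, 0.2, 0.3, 2.5]]
targets = [0, 1, 3]  # reference token ids from the training label
print(round(cross_entropy(logits, targets), 4))
```

The loss shrinks as the model puts more probability mass on the reference tokens, which is what the weight updates push toward.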

Single task vs. Multi-task

Single task fine-tuning

Good results can be achieved with relatively few examples (often 500-1000 examples) for single task fine-tuning. However, fine-tuning on a single task can lead to catastrophic forgetting, i.e. fine-tuning significantly improves performance of the model on a specific task but degrades performance on other tasks.

How to avoid catastrophic forgetting?

- First of all, you might not have to if you only care about the task you fine-tuned for

- Fine-tune on multiple tasks at the same time

- Consider Parameter Efficient Fine-tuning (PEFT)

Multi-task fine-tuning

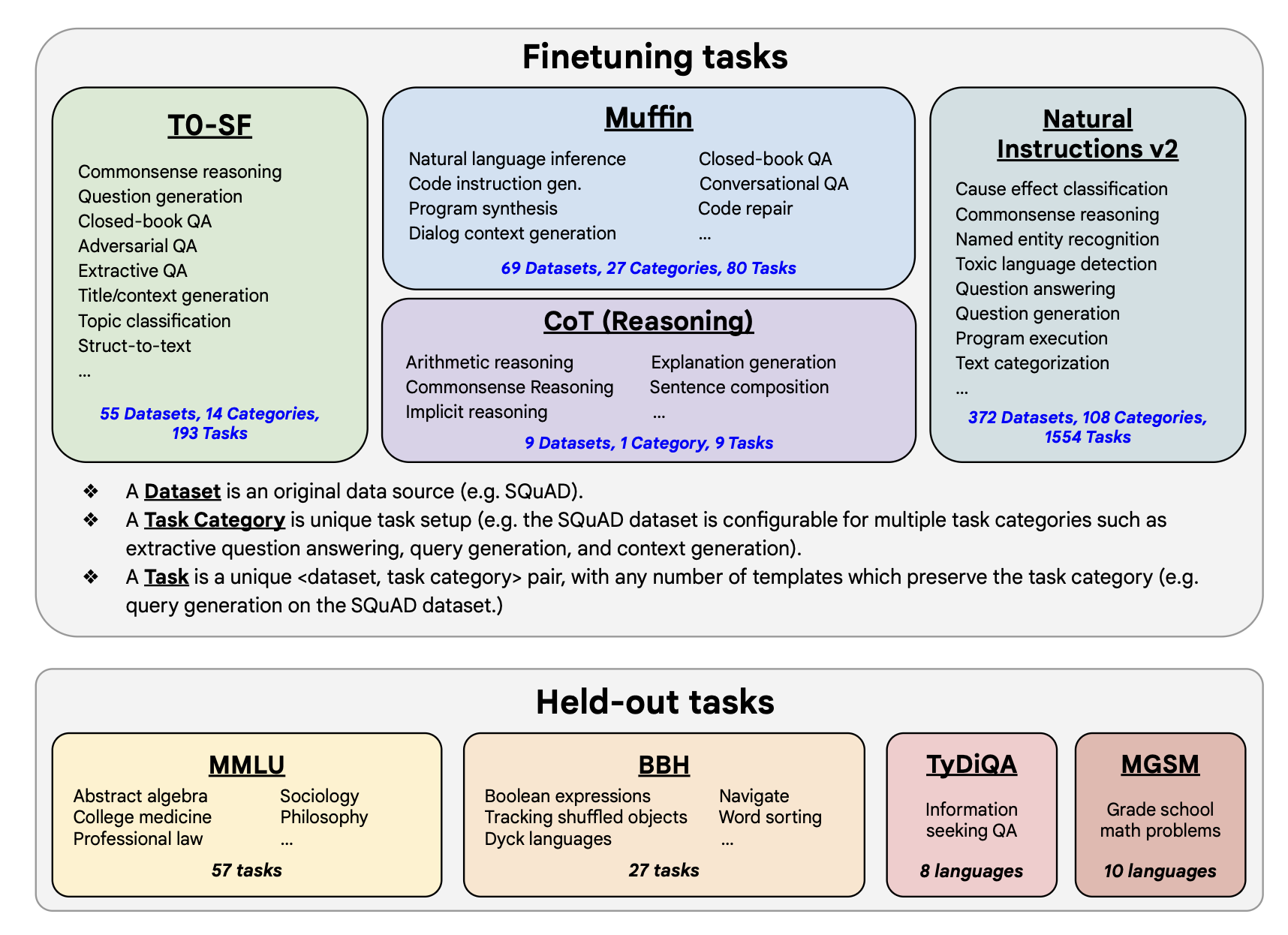

Over many epochs of training, the calculated losses across examples are used to update the weights of the model, resulting in an instruction fine-tuned model that learned how to be good at many different tasks simultaneously.
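One simple way to picture the mixed training set is to pool the examples from every task and shuffle them, so that each batch contains several kinds of instruction. The sketch below assumes this pooling strategy; the task names, examples, and helper functions are invented for illustration.

```python
import random


def mix_tasks(task_datasets, seed=0):
    """Pool examples from all tasks and shuffle them together."""
    mixed = [
        {"task": task, **example}
        for task, examples in task_datasets.items()
        for example in examples
    ]
    random.Random(seed).shuffle(mixed)
    return mixed


def batches(examples, batch_size):
    """Yield consecutive batches of the given size."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]


# Hypothetical multi-task instruction data.
task_datasets = {
    "summarize": [{"prompt": f"Summarize the following text: doc {i}", "completion": f"summary {i}"} for i in range(4)],
    "translate": [{"prompt": f"Translate this sentence: sentence {i}", "completion": f"translation {i}"} for i in range(4)],
    "classify": [{"prompt": f"Classify this review: review {i}", "completion": "Positive"} for i in range(4)],
}

mixed = mix_tasks(task_datasets)
for batch in batches(mixed, batch_size=4):
    print([example["task"] for example in batch])
```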

The drawback of multi-task fine-tuning is that it requires a lot of data. However, assembling this data can be well worth the effort: the resulting models are often very capable and suitable for situations where good performance on many tasks is desirable.

Instruction fine-tuning with FLAN

FLAN stands for Fine-tuned LAnguage Net. It refers to a specific set of instructions used to instruction fine-tune different models. FLAN-T5, the FLAN instruction fine-tuned version of the pre-trained T5 model, is a great general-purpose instruct model.

LLM evaluation

Metrics: ROUGE

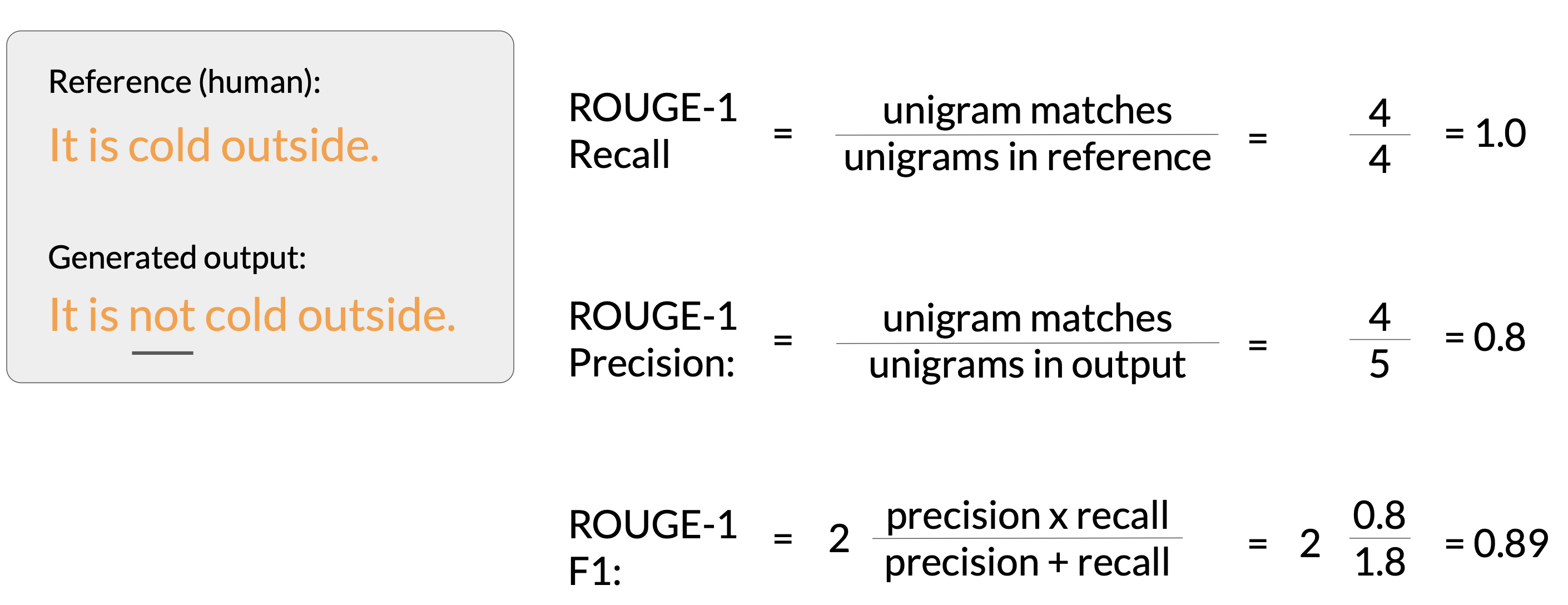

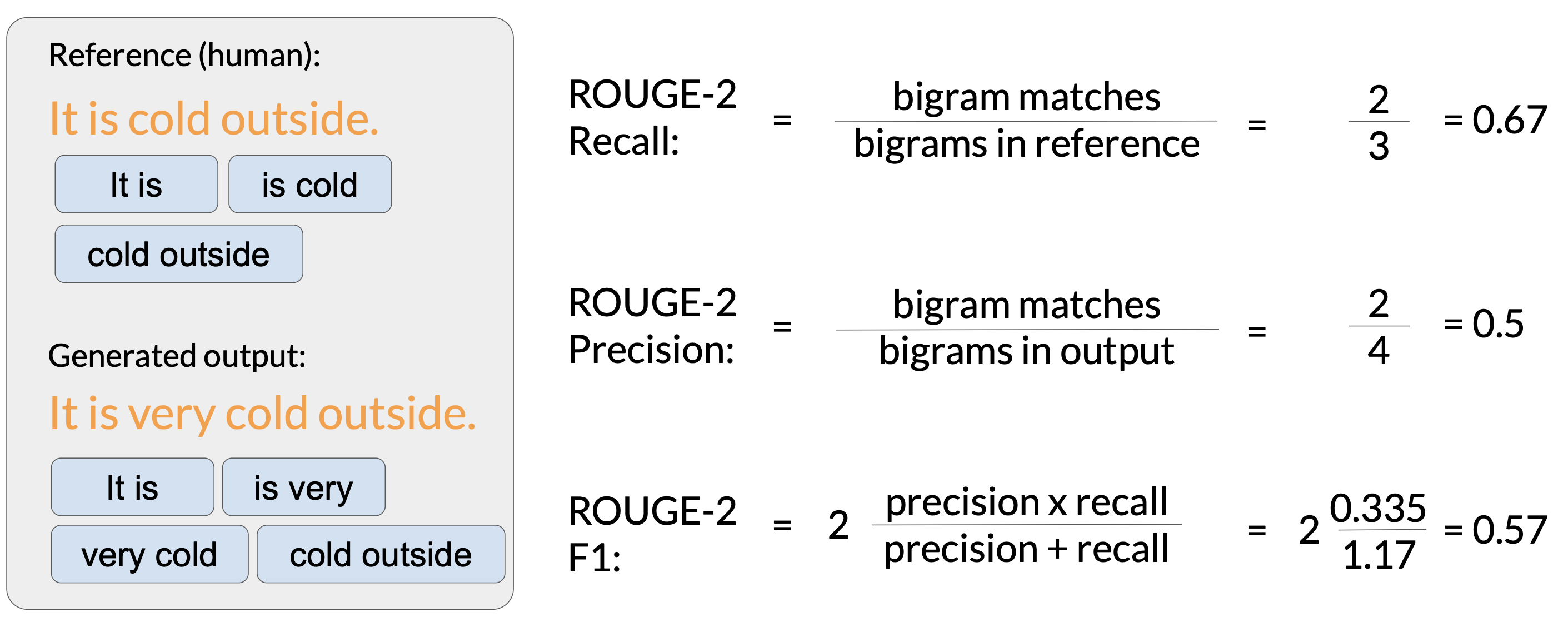

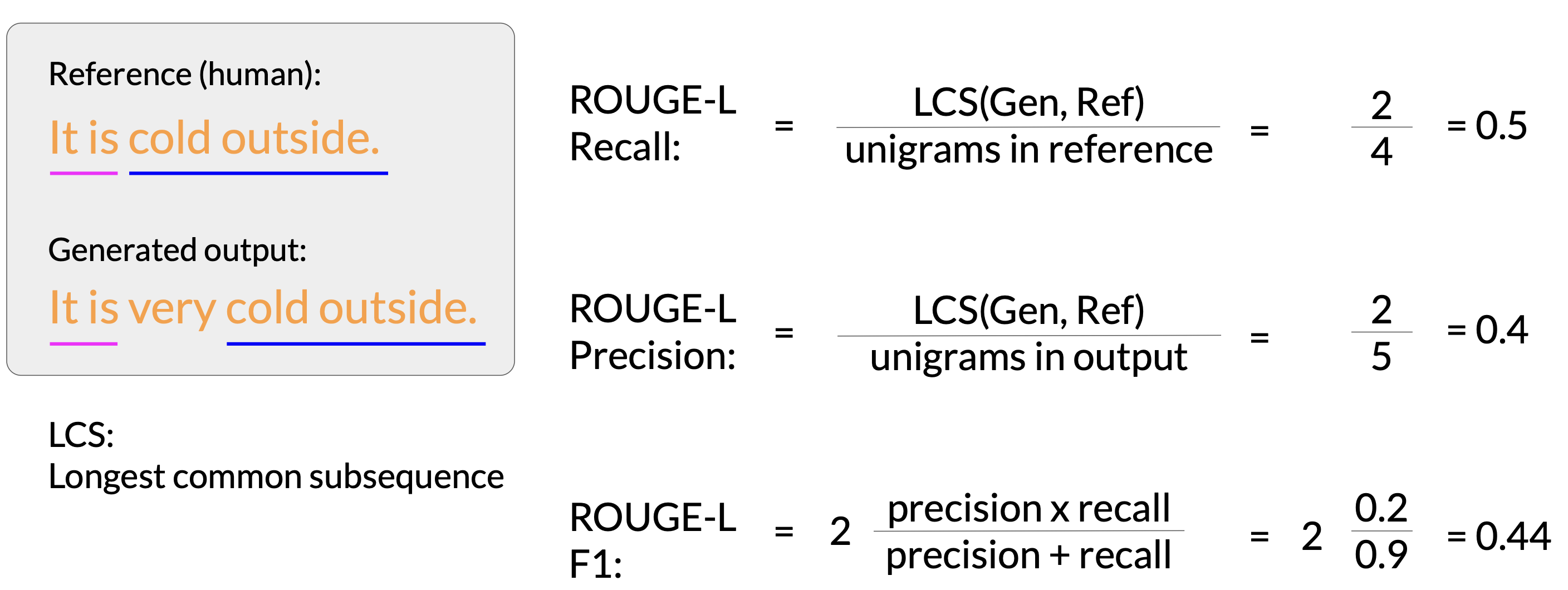

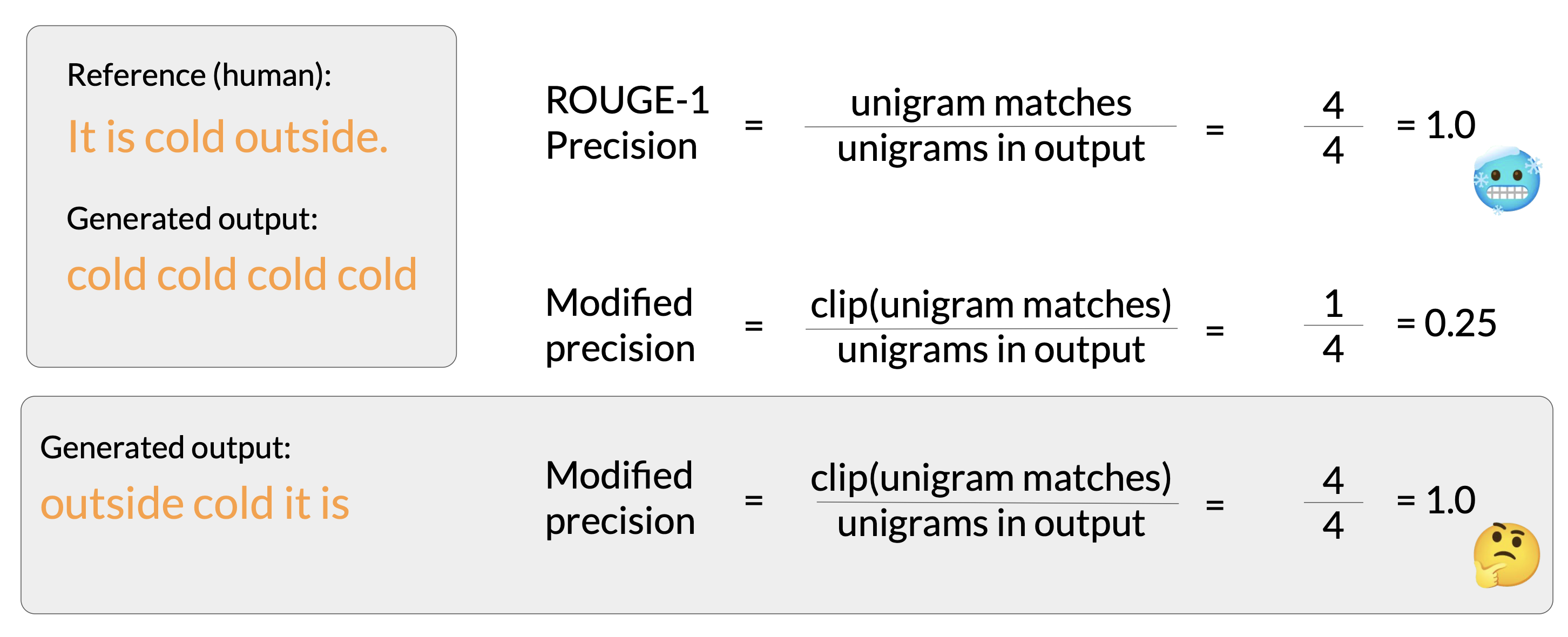

ROUGE is used for text summarization and text generation tasks. The figures above demonstrate the usage of ROUGE-1, ROUGE-2, ROUGE-L, and ROUGE clipping.

ROUGE-1 measures the overlap of unigrams (single words) between the generated and reference texts.

ROUGE-2 measures the overlap of bigrams (two-word sequences) between the generated and reference texts.
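A minimal sketch of ROUGE-N is shown below, assuming lowercased whitespace tokenization and a single reference. The `rouge_n` helper is written for illustration and is not the API of any ROUGE package; matches are counted with a `Counter` intersection, so a repeated n-gram is only credited as often as it occurs in both texts.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n(reference, generated, n):
    """Recall, precision and F1 of n-gram overlap against one reference."""
    ref = Counter(ngrams(reference.lower().split(), n))
    gen = Counter(ngrams(generated.lower().split(), n))
    overlap = sum((ref & gen).values())  # matching n-grams
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(gen.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


reference = "It is cold outside"
generated = "It is very cold outside"
print(rouge_n(reference, generated, 1))  # recall 1.0, precision 0.8
print(rouge_n(reference, generated, 2))  # recall 2/3, precision 0.5
```

The bigram scores are lower than the unigram scores because the inserted word "very" breaks two of the generated bigrams.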

ROUGE-L measures the longest common subsequence (LCS) between the generated and reference texts, capturing sentence-level fluency and structure.
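The LCS can be computed with a small dynamic-programming table. The sketch below uses the standard subsequence definition, in which matched words must appear in the same order but do not have to be adjacent, and again assumes lowercased whitespace tokenization and a single reference. The example sentences are made up.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]


def rouge_l(reference, generated):
    """Recall, precision and F1 based on the LCS length."""
    ref = reference.lower().split()
    gen = generated.lower().split()
    lcs = lcs_length(ref, gen)
    recall = lcs / max(len(ref), 1)
    precision = lcs / max(len(gen), 1)
    f1 = 0.0 if lcs == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


reference = "the cat sat on the mat"
generated = "the cat lay on the mat"
print(lcs_length(reference.split(), generated.split()))  # 5
print(rouge_l(reference, generated))
```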

ROUGE clipping limits the count of overlapping n-grams to the maximum number that appears in the reference, preventing the generated text from getting extra credit for repeated words.
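The effect of clipping is easiest to see on a degenerate output that repeats one word from the reference. This sketch compares unigram precision with and without clipping; the `unigram_precision` helper and the example strings are illustrative assumptions.

```python
from collections import Counter


def unigram_precision(reference, generated, clip=True):
    """Fraction of generated words that match the reference."""
    ref_counts = Counter(reference.lower().split())
    gen_counts = Counter(generated.lower().split())
    matches = 0
    for word, count in gen_counts.items():
        if word in ref_counts:
            # With clipping, a word is credited at most as often as it
            # appears in the reference.
            matches += min(count, ref_counts[word]) if clip else count
    return matches / max(sum(gen_counts.values()), 1)


reference = "It is cold outside"
generated = "cold cold cold cold"
print(unigram_precision(reference, generated, clip=False))  # 1.0
print(unigram_precision(reference, generated, clip=True))   # 0.25
```

Without clipping the repeated word earns a perfect precision; with clipping it is credited only once.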

Metrics: BLEU score

The BLEU score is used for machine translation. Below is the core BLEU formula, from Papineni et al. 2002.

\[\text{BLEU} = \text{BP} \cdot \exp\left( \sum_{n=1}^{N} w_n \log p_n \right)\]

- BLEU: The final BLEU score (0 to 1), evaluating how closely the generated text matches the reference.

- BP: Brevity Penalty, which penalizes candidates that are shorter than the reference.

- \(\exp\): Exponential function, used to combine the log-precisions into a geometric mean.

- \(w_n\): Weight for each n-gram order (e.g., 0.25 when using up to 4-grams).

- \(p_n\): Modified precision for n-grams of size \(n\), with clipping to avoid over-counting.

- \(N\): Maximum n-gram order (usually 4 in practice).
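The formula can be turned into a short sentence-level sketch. It makes several simplifying assumptions: a single reference, lowercased whitespace tokenization, uniform weights \(w_n = 1/N\), and no smoothing, so the score is 0 whenever any n-gram order has no match. The brevity penalty follows the usual definition, 1 when the candidate is longer than the reference and \(\exp(1 - r/c)\) otherwise, where \(c\) and \(r\) are the candidate and reference lengths. The helper names and example sentences are made up.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(reference, candidate, max_n=4):
    """Unsmoothed sentence-level BLEU against a single reference."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        ref_counts = ngram_counts(ref, n)
        cand_counts = ngram_counts(cand, n)
        clipped = sum((ref_counts & cand_counts).values())  # clipped matches
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 here
        log_precision_sum += (1.0 / max_n) * math.log(clipped / total)
    c, r = len(cand), len(ref)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(log_precision_sum)


reference = "the cat sat on the mat today"
print(bleu(reference, reference))                 # identical text scores 1.0
print(bleu(reference, "the cat sat on the mat"))  # shorter candidate is penalized
```

In the second call every n-gram precision is 1, so the score below 1 comes entirely from the brevity penalty.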

Benchmarks

Selecting the right evaluation dataset is vital to an accurate evaluation of model performance. Example evaluation benchmarks include GLUE, SuperGLUE, MMLU (Massive Multitask Language Understanding), HELM, and BIG-bench.

References

- Scaling Instruction-Finetuned Language Models

  - Scaling fine-tuning with a focus on task, model size and chain-of-thought data.

- Introducing FLAN: More generalizable Language Models with Instruction Fine-Tuning

  - This blog (and article) explores instruction fine-tuning, which aims to make language models better at performing NLP tasks with zero-shot inference.

- HELM - Holistic Evaluation of Language Models

  - HELM is a living benchmark to evaluate Language Models more transparently.

- General Language Understanding Evaluation (GLUE) benchmark

  - This paper introduces GLUE, a benchmark for evaluating models on diverse natural language understanding (NLU) tasks and emphasizing the importance of improved general NLU systems.

- SuperGLUE

  - This paper introduces SuperGLUE, a benchmark designed to evaluate the performance of various NLP models on a range of challenging language understanding tasks.

- ROUGE: A Package for Automatic Evaluation of Summaries

  - This paper introduces and evaluates four different measures (ROUGE-N, ROUGE-L, ROUGE-W, and ROUGE-S) in the ROUGE summarization evaluation package, which assess the quality of summaries by comparing them to ideal human-generated summaries.

- BLEU: a Method for Automatic Evaluation of Machine Translation

- Measuring Massive Multitask Language Understanding (MMLU)

  - This paper presents a new test to measure multitask accuracy in text models, highlighting the need for substantial improvements in achieving expert-level accuracy and addressing lopsided performance and low accuracy on socially important subjects.

- BIG-bench - Beyond the Imitation Game: Quantifying and Extrapolating the Capabilities of Language Models

  - The paper introduces BIG-bench, a benchmark for evaluating language models on challenging tasks, providing insights on scale, calibration, and social bias.