GenAI with LLMs (1) Fundamentals

This post covers fundamentals from the Generative AI With LLMs course offered by DeepLearning.AI.

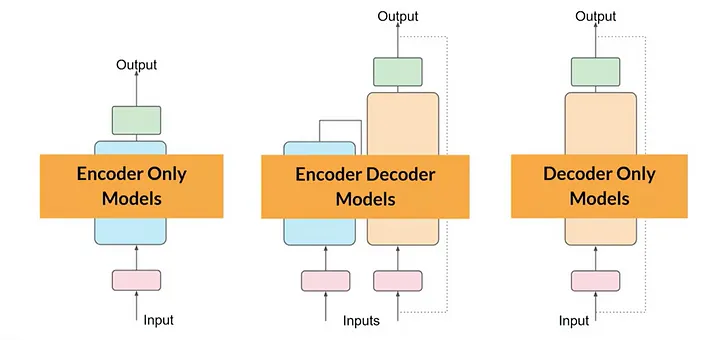

Transformer variants

Encoder only models

- Also known as Autoencoding models.

- Input sequence and output sequence are the same length.

- Use cases

  - Sentiment analysis

  - Named entity recognition

  - Word classification

- Examples: BERT, RoBERTa

Encoder Decoder models

- Also known as Seq2Seq models.

- Input sequence and output sequence can be different lengths.

- Use cases

  - Translation

  - Text summarization

  - Question answering

- Examples: T5, BART

Decoder only models

- Also known as Autoregressive models.

- Widely used today. Can generalize to most tasks.

- Use cases

  - Text generation

- Examples: GPT, BLOOM, Jurassic, LLaMA

Prompt engineering

In-context learning

In-context learning means including examples of the task you want the model to carry out inside the prompt, that is, inside the context window. These examples can help the model produce better completions.

Zero-shot, one-shot, few-shot

Zero-shot inference: including zero examples.

One-shot inference: including a single example.

Few-shot inference: including several examples.

Note:

- The largest LLMs can do well at zero-shot inference. Smaller LLMs often struggle with it and benefit from seeing examples of the desired behavior in the prompt.

- The context window limits how many examples you can pass to the model. If your model still isn’t performing well with 5 or 6 examples, you should try fine-tuning it instead.
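As a rough illustration of the three prompt styles, here is a minimal sketch that assembles zero-shot, one-shot, and few-shot prompts for a sentiment task. The `build_prompt` helper, the prompt wording, and the example reviews are all made up for this post; they are not a required format.

```python
def build_prompt(task_input, examples=()):
    """Build a sentiment prompt with zero or more worked examples (hypothetical helper)."""
    parts = []
    for review, sentiment in examples:
        # Each in-context example shows the model a completed input/output pair.
        parts.append(f"Classify this review:\n{review}\nSentiment: {sentiment}\n")
    # The final block leaves the answer blank for the model to complete.
    parts.append(f"Classify this review:\n{task_input}\nSentiment:")
    return "\n".join(parts)


# Made-up examples of the desired behavior.
examples = [
    ("I loved this movie!", "Positive"),
    ("I don't like this chair.", "Negative"),
]

zero_shot = build_prompt("The plot was dull.")
one_shot = build_prompt("The plot was dull.", examples[:1])
few_shot = build_prompt("The plot was dull.", examples)

print(few_shot)
```

Every extra example makes the prompt longer, which is why the context window ends up capping how many you can include.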

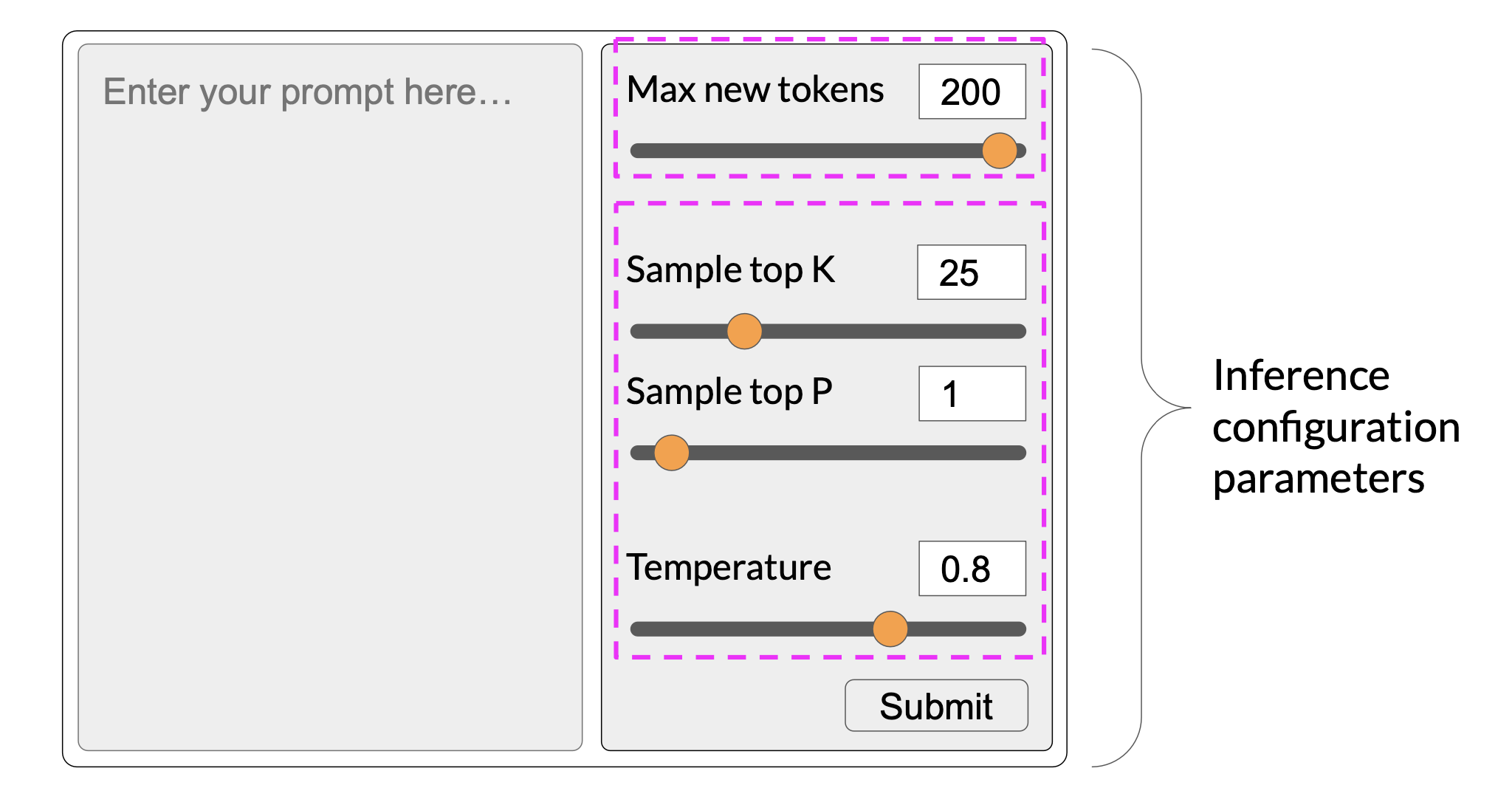

Generative configuration - inference parameters

Max new tokens

- Used to limit the number of tokens that the model will generate. You can think of this as putting a cap on the number of times the model will go through the selection process (i.e., from computing probability distribution to sampling or selecting the next token).

- Remember that this is a maximum, not a fixed number of new tokens. Generation can stop earlier, for example when the model predicts an end-of-sequence token.
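The cap can be pictured as the bound on a generation loop. The sketch below uses a toy stand-in for the model (`toy_next_token`, with a scripted continuation and an `<eos>` marker, all invented for illustration) to show that the loop may finish before the cap is reached.

```python
EOS = "<eos>"
# Made-up continuation that the toy "model" emits one token at a time.
SCRIPT = ["the", "cat", "sat", EOS]


def toy_next_token(num_generated):
    """Hypothetical stand-in for one pass through the model's selection process."""
    return SCRIPT[min(num_generated, len(SCRIPT) - 1)]


def generate(prompt_tokens, max_new_tokens):
    new_tokens = []
    for _ in range(max_new_tokens):      # at most max_new_tokens selection steps
        token = toy_next_token(len(new_tokens))
        if token == EOS:                 # the model can stop before the cap
            break
        new_tokens.append(token)
    return list(prompt_tokens) + new_tokens


short = generate(["Look:"], max_new_tokens=2)   # stopped by the cap
full = generate(["Look:"], max_new_tokens=50)   # stopped by the end-of-sequence token
print(short)
print(full)
```

With a cap of 2 the loop is cut off after two new tokens. With a cap of 50 it still produces only three, because the toy model emits its end-of-sequence token first.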

Top-k and Top-p

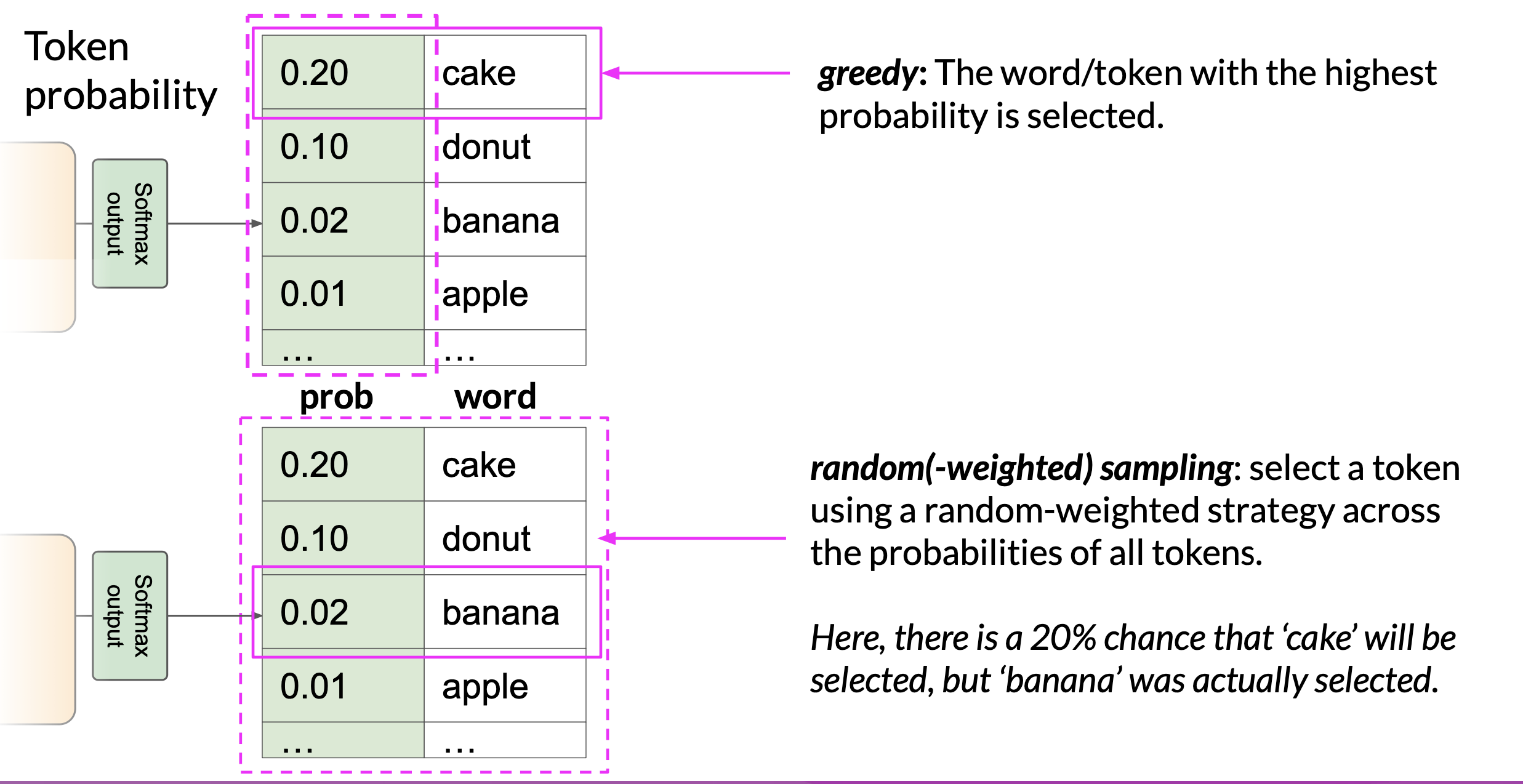

Recall that the output of the transformer’s softmax layer is a probability distribution over the entire vocabulary of tokens that the model uses.

Greedy decoding

- Model chooses the word/token with the highest probability.

- This method can work very well for short generations but is susceptible to repeated words or repeated sequences of words. If you want to generate text that is more natural and more creative, and that avoids repeating words, you need to use some other controls.

Random (weighted) sampling

- The model chooses the output word at random, using the probability distribution to weight the selection.

- This reduces the likelihood that words will be repeated. However, depending on the setting, the output may be too creative, producing words that cause the generation to wander off into topics or words that just don’t make sense.
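The difference between the two strategies can be sketched in a few lines of plain Python. The four-token vocabulary and its probabilities are made up for illustration; a real model produces a distribution over its whole vocabulary.

```python
import random

# Toy next-token distribution (made-up values that sum to 1).
probs = {"cake": 0.5, "donut": 0.3, "banana": 0.15, "apple": 0.05}


def greedy(probs):
    """Greedy decoding: always pick the most probable token."""
    return max(probs, key=probs.get)


def sample(probs, rng):
    """Random (weighted) sampling: pick a token in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so the run is repeatable
greedy_pick = greedy(probs)
draws = [sample(probs, rng) for _ in range(1000)]
counts = {t: draws.count(t) for t in probs}

print(greedy_pick)
print(counts)
```

Greedy decoding returns the same token every time, while weighted sampling will occasionally return even the least likely token.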

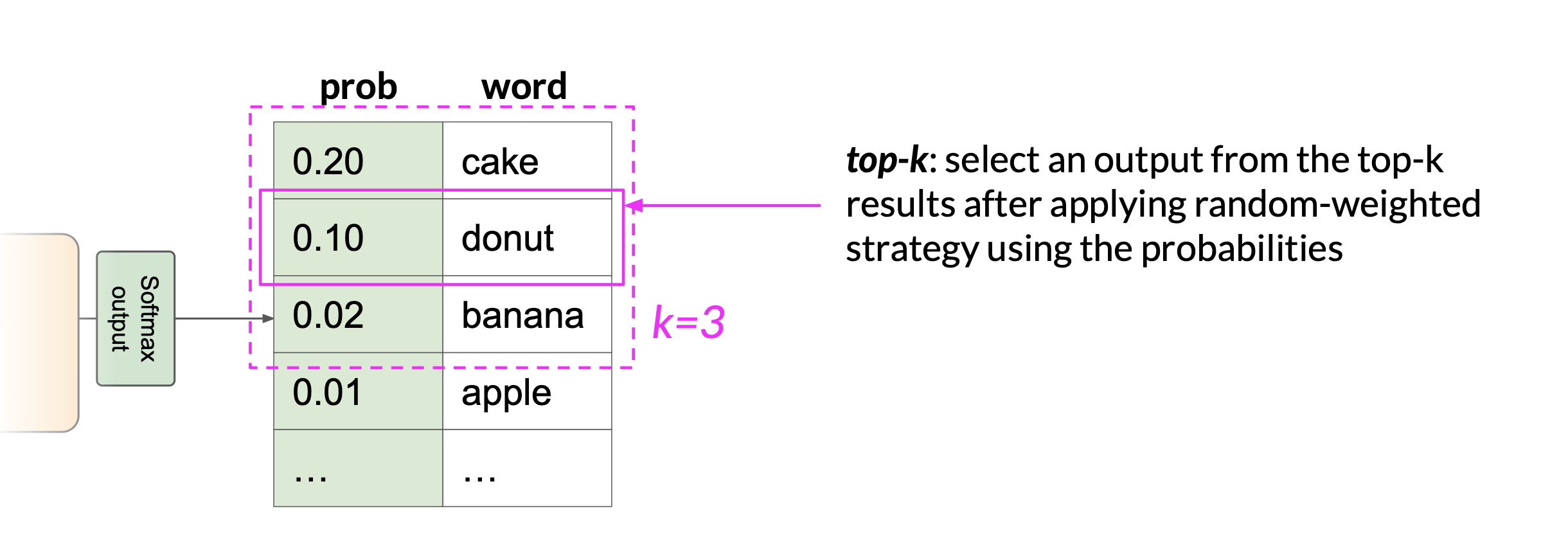

Top-k and top-p are sampling methods we can use to limit the random sampling and increase the chance that the output will be sensible.

Top-k

- The model chooses from only the \(k\) tokens with the highest probability. It then selects from these options using the probability weighting.

- This method can help the model have some randomness while preventing the selection of highly improbable completion words. This in turn makes your text generation more likely to sound reasonable and to make sense.
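A minimal sketch of top-k filtering, assuming the same kind of toy distribution as before (the tokens and probabilities are made up). The `top_k_filter` helper is hypothetical. Renormalizing the kept probabilities is one common choice, though the weighted draw would work on the raw values too.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize so they sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = ranked[:k]
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Toy next-token distribution (made-up values).
probs = {"cake": 0.5, "donut": 0.3, "banana": 0.15, "apple": 0.05}
filtered = top_k_filter(probs, k=3)

# Weighted sampling now only ever sees the surviving tokens.
rng = random.Random(0)
tokens = list(filtered)
weights = [filtered[t] for t in tokens]
draws = rng.choices(tokens, weights=weights, k=1000)

print(filtered)
```

With \(k = 3\), the least likely token ("apple") can no longer be selected, no matter how many times you sample.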

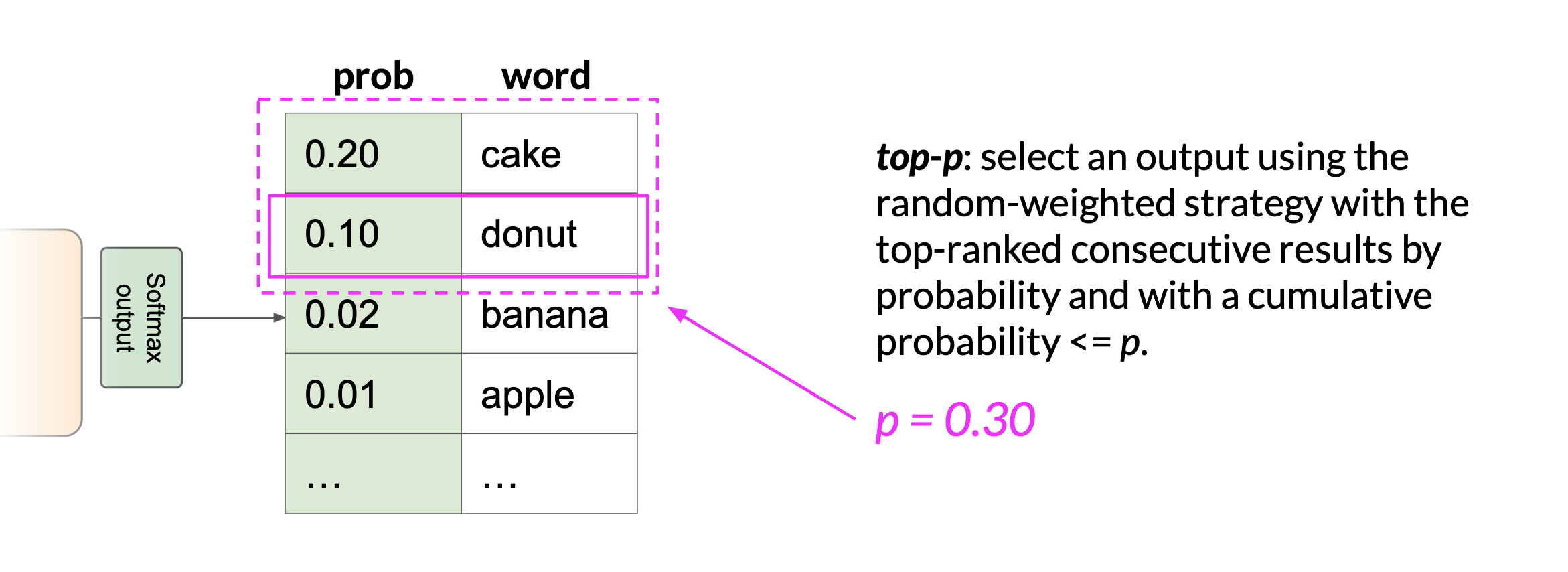

Top-p

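Limits the random sampling to the highest-probability predictions, taken in descending order, whose combined probabilities do not exceed \(p\). The model then selects from these options using the probability weighting.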
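Here is a minimal sketch of top-p filtering that follows the definition above: walk the tokens from most to least probable and keep them while the running total stays within \(p\). The `top_p_filter` helper and the toy distribution are made up. Keeping at least one token is an added assumption so that sampling never faces an empty set, and some libraries define the cutoff slightly differently.

```python
def top_p_filter(probs, p):
    """Keep the most probable tokens whose cumulative probability stays within p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        # Always keep the first token (assumption), then stop once p would be exceeded.
        if kept and cumulative + prob > p:
            break
        kept.append((token, prob))
        cumulative += prob
    # Renormalize so the surviving probabilities sum to 1.
    return {token: prob / cumulative for token, prob in kept}


# Toy next-token distribution (made-up values).
probs = {"cake": 0.5, "donut": 0.3, "banana": 0.15, "apple": 0.05}
filtered = top_p_filter(probs, p=0.9)

print(filtered)
```

Unlike top-k, the number of surviving tokens is not fixed: a sharply peaked distribution keeps few tokens, while a flat one keeps many.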

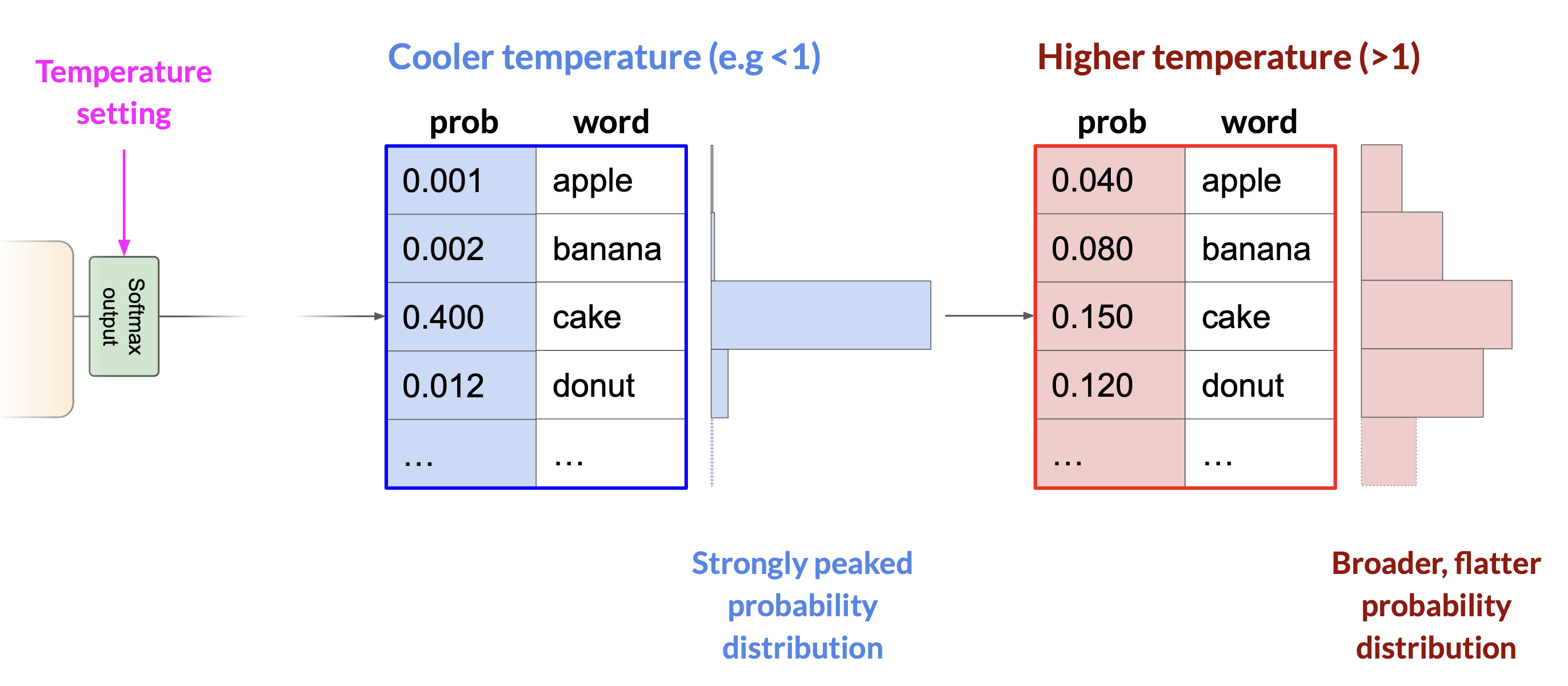

Temperature

- This parameter influences the shape of the probability distribution that the model calculates for the next token.

- The temperature is a scaling factor applied within the final softmax layer of the model. In contrast to top-k and top-p, which only restrict which tokens can be sampled, changing the temperature actually alters the predictions the model will make.

- Cooler temperature (\(<1\)) - strongly peaked distribution.

- Warmer temperature (\(>1\)) - broader, flatter distribution.
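To make the effect concrete, here is a small sketch assuming the usual formulation, in which each logit \(z_i\) is divided by the temperature \(T\) before the softmax: \(p_i = \exp(z_i / T) / \sum_j \exp(z_j / T)\). The logits are made-up values.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits that have been divided by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, 0.1]  # made-up scores for four tokens

cool = softmax_with_temperature(logits, temperature=0.5)
default = softmax_with_temperature(logits, temperature=1.0)
warm = softmax_with_temperature(logits, temperature=2.0)

for name, dist in [("cool", cool), ("default", default), ("warm", warm)]:
    print(name, [round(p, 3) for p in dist])
```

The cooler setting concentrates probability on the top token, and the warmer setting spreads it more evenly across the alternatives. A temperature of 1 leaves the softmax unchanged.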

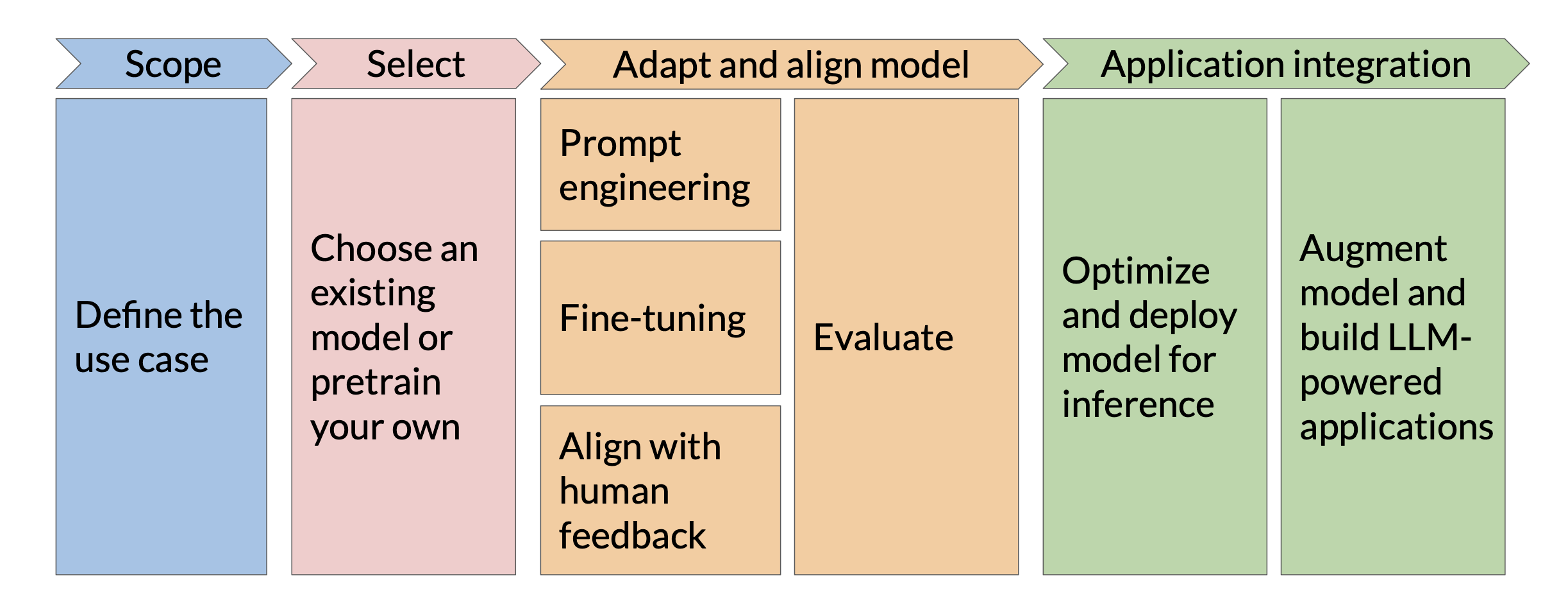

GenAI lifecycle

References

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention Is All You Need. arXiv preprint arXiv:1706.03762.

  - The Transformer architecture, with the core “self-attention” mechanism.

- Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). Improving Language Understanding by Generative Pre-Training. OpenAI Technical Report.

  - GPT

- Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., & Stoyanov, V. (2019). RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv preprint arXiv:1907.11692.

  - RoBERTa

- Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., … & Liu, P. J. (2020). Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv preprint arXiv:1910.10683.

  - T5

- Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., … & Zettlemoyer, L. (2020). BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv preprint arXiv:1910.13461.

  - BART

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., … & Amodei, D. (2020). Language Models are Few-Shot Learners. arXiv preprint arXiv:2005.14165.

  - GPT-3

- BigScience Workshop. (2022). BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arXiv preprint arXiv:2211.05100.

  - BLOOM

- AI21 Labs. (2021). Jurassic-1: Technical Details and Evaluation. AI21 Labs Blog.

  - Jurassic

- Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M.-A., Lacroix, T., … & Lample, G. (2023). LLaMA: Open and Efficient Foundation Language Models. arXiv preprint arXiv:2302.13971.

  - LLaMA